Google Cloud Certified Professional Data Engineer Exam Practice Test

Scaling a Cloud Dataproc cluster typically involves ____.

Answer : A

After creating a Cloud Dataproc cluster, you can scale the cluster by increasing or decreasing the number of worker nodes in the cluster at any time, even when jobs are running on the cluster. Cloud Dataproc clusters are typically scaled to:

1) increase the number of workers to make a job run faster

2) decrease the number of workers to save money

3) increase the number of nodes to expand available Hadoop Distributed File System (HDFS) storage
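As a rough illustration of the resize operation described above, the sketch below only builds the argument list for `gcloud dataproc clusters update` with its `--num-workers` flag; it does not run anything. The helper name, cluster name, and region are hypothetical placeholders.

```python
def build_scale_command(cluster: str, region: str, num_workers: int) -> list[str]:
    """Return the gcloud arguments that set a cluster's primary worker count.

    The helper itself is hypothetical; it only assembles the command so the
    caller can inspect it or pass it to subprocess.run().
    """
    if num_workers < 0:
        raise ValueError("num_workers must not be negative")
    return [
        "gcloud", "dataproc", "clusters", "update", cluster,
        f"--region={region}",
        f"--num-workers={num_workers}",
    ]


# Hypothetical cluster and region, used only for illustration.
scale_up = build_scale_command("example-cluster", "us-central1", 5)
scale_down = build_scale_command("example-cluster", "us-central1", 2)
```

Scaling up and scaling down use the same command; only the target worker count changes.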

You migrated your on-premises Apache Hadoop Distributed File System (HDFS) data lake to Cloud Storage. The data scientist team needs to process the data by using Apache Spark and SQL. Security policies need to be enforced at the column level. You need a cost-effective solution that can scale into a data mesh. What should you do?

Answer : D

To automate the CI/CD pipeline for DAGs running in Cloud Composer, test each DAG in a development instance first and promote it to production only after it passes.

Use Cloud Build for Development Instance Testing:

Use Cloud Build to automate the process of copying the DAG code to the Cloud Storage bucket of the development instance.

Cloud Composer syncs DAGs from this bucket automatically, so the new DAGs are picked up and can be tested in the development environment.

Testing and Validation:

Ensure that the DAGs run successfully in the development environment.

Validate the functionality and correctness of the DAGs before promoting them to production.

Deploy to Production:

If the DAGs pass all tests in the development environment, use Cloud Build to copy the tested DAG code to the Cloud Storage bucket of the production instance.

This ensures that only validated and tested DAGs are deployed to production, maintaining the stability and reliability of the production environment.

Simplicity and Reliability:

This approach uses Cloud Build for automation and relies on the fact that Cloud Composer reads its DAGs from a Cloud Storage bucket.

By using Cloud Storage for both development and production deployments, the process remains simple and robust.
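The promotion flow described above can be sketched as a small planning function. This is not Cloud Build configuration; it is a minimal illustration that assembles `gcloud composer environments storage dags import` commands and adds the production step only when the development tests passed. The function name, environment names, location, and DAG directory are all hypothetical.

```python
def plan_dag_deployment(dag_dir: str, dev_env: str, prod_env: str,
                        location: str, dev_tests_passed: bool) -> list[list[str]]:
    """Return the ordered copy commands for a dev-then-prod DAG rollout."""

    def import_cmd(environment: str) -> list[str]:
        # Copies the DAG directory into the environment's Cloud Storage bucket.
        return [
            "gcloud", "composer", "environments", "storage", "dags", "import",
            f"--environment={environment}",
            f"--location={location}",
            f"--source={dag_dir}",
        ]

    steps = [import_cmd(dev_env)]
    if dev_tests_passed:
        # Only validated DAGs are promoted to production.
        steps.append(import_cmd(prod_env))
    return steps


# Hypothetical names, for illustration only.
failed = plan_dag_deployment("dags/", "dev-env", "prod-env", "us-central1", False)
passed = plan_dag_deployment("dags/", "dev-env", "prod-env", "us-central1", True)
```

In a real pipeline each command would typically be a separate build step, with the test step in between deciding whether the build continues.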

Google Data Engineer Reference:

Cloud Composer Documentation

Using Cloud Build

Deploying DAGs to Cloud Composer

Automating DAG Deployment with Cloud Build

By implementing this CI/CD pipeline, you ensure that DAGs are thoroughly tested in the development environment before being automatically deployed to the production environment, maintaining high quality and reliability.

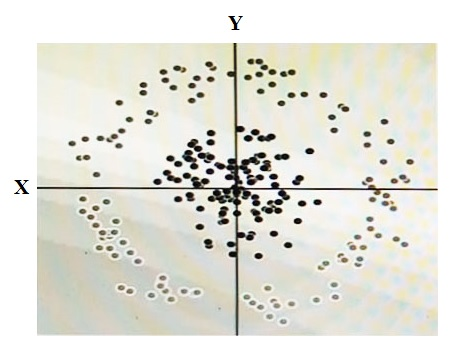

You have some data, which is shown in the graphic below. The two dimensions are X and Y, and the shade of each dot represents what class it is. You want to classify this data accurately using a linear algorithm.

To do this you need to add a synthetic feature. What should the value of that feature be?

Answer : D
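The answer options are not reproduced here, so the sketch below does not restate option D. It is only a generic illustration of why a synthetic feature can help a linear model, using hypothetical toy data (two concentric rings) that is not necessarily the pattern in the graphic. For that toy data, the assumed feature `x*x + y*y` turns a circular boundary into a single threshold.

```python
import math


def ring(radius: float, count: int) -> list[tuple[float, float]]:
    """Return `count` evenly spaced points on a circle of the given radius."""
    return [
        (radius * math.cos(2 * math.pi * i / count),
         radius * math.sin(2 * math.pi * i / count))
        for i in range(count)
    ]


def synthetic_feature(x: float, y: float) -> float:
    # Assumed feature for this toy data only: squared distance from the origin.
    return x * x + y * y


def linear_classify(feature: float, threshold: float = 4.0) -> int:
    # A linear decision rule on one feature is just a threshold.
    return 1 if feature > threshold else 0


inner = ring(1.0, 12)   # class 0 in the toy data
outer = ring(3.0, 12)   # class 1 in the toy data

inner_labels = [linear_classify(synthetic_feature(x, y)) for x, y in inner]
outer_labels = [linear_classify(synthetic_feature(x, y)) for x, y in outer]
```

No straight line in the raw (X, Y) plane separates the two rings, but on the synthetic feature every inner point falls below the threshold and every outer point above it.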

You want to store your team's shared tables in a single dataset to make data easily accessible to various analysts. You want to make this data readable but unmodifiable by analysts. At the same time, you want to provide the analysts with individual workspaces in the same project, where they can create and store tables for their own use, without the tables being accessible by other analysts. What should you do?

Answer : C

The BigQuery Data Viewer role allows users to read data and metadata from tables and views, but not to modify or delete them. By giving analysts this role on the shared dataset, you can ensure that they can access the data for analysis, but not change it. The BigQuery Data Editor role allows users to create, update, and delete tables and views, as well as read and write data. By giving analysts this role at the dataset level for their assigned dataset, you can provide them with individual workspaces where they can store their own tables and views, without affecting the shared dataset or other analysts' datasets. This way, you can achieve both data protection and data isolation for your team.

Reference:

BigQuery IAM roles and permissions
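The role layout described in this explanation can be sketched as a list of dataset-level bindings. The role IDs `roles/bigquery.dataViewer` and `roles/bigquery.dataEditor` are the predefined BigQuery roles named above; the helper, the dataset names, and the analyst addresses are hypothetical, and the code only builds the bindings rather than applying them.

```python
def build_bindings(shared_dataset: str, analysts: list[str]) -> list[tuple[str, str, str]]:
    """Return (dataset, role, member) tuples for the shared and per-analyst datasets."""
    bindings = []
    for analyst in analysts:
        member = f"user:{analyst}"
        # Read-only access to the team's shared tables.
        bindings.append((shared_dataset, "roles/bigquery.dataViewer", member))
        # Hypothetical naming scheme: one private workspace dataset per analyst.
        workspace = f"workspace_{analyst.split('@')[0]}"
        bindings.append((workspace, "roles/bigquery.dataEditor", member))
    return bindings


# Hypothetical dataset and users, for illustration only.
bindings = build_bindings("shared_tables", ["ana@example.com", "bo@example.com"])
```

Because the Data Editor role is granted per dataset rather than at the project level, no analyst receives any binding on another analyst's workspace.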

You are designing a data processing pipeline. The pipeline must be able to scale automatically as load increases. Messages must be processed at least once, and must be ordered within windows of 1 hour. How should you design the solution?

Answer : D

Flowlogistic's management has determined that the current Apache Kafka servers cannot handle the data volume for their real-time inventory tracking system. You need to build a new system on Google Cloud Platform (GCP) that will feed the proprietary tracking software. The system must be able to ingest data from a variety of global sources, process and query in real-time, and store the data reliably. Which combination of GCP products should you choose?

Answer : C

If you're running a performance test that depends upon Cloud Bigtable, all the choices except one below are recommended steps. Which is NOT a recommended step to follow?

Answer : A

If you're running a performance test that depends upon Cloud Bigtable, be sure to follow these steps as you plan and execute your test:

Use a production instance. A development instance will not give you an accurate sense of how a production instance performs under load.

Use at least 300 GB of data. Cloud Bigtable performs best with 1 TB or more of data. However, 300 GB of data is enough to provide reasonable results in a performance test on a 3-node cluster. On larger clusters, use 100 GB of data per node.

Before you test, run a heavy pre-test for several minutes. This step gives Cloud Bigtable a chance to balance data across your nodes based on the access patterns it observes.

Run your test for at least 10 minutes. This step lets Cloud Bigtable further optimize your data, and it helps ensure that you will test reads from disk as well as cached reads from memory.
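The sizing guidance above (at least 300 GB, or 100 GB per node on larger clusters, and a run of at least 10 minutes) reduces to simple arithmetic. The sketch below is one way to check a test plan against those numbers; the function names are hypothetical, and the thresholds are taken directly from the steps listed above.

```python
MIN_DATA_GB = 300       # floor that suits a 3-node cluster
GB_PER_NODE = 100       # guidance for larger clusters
MIN_TEST_MINUTES = 10   # minimum test duration


def min_test_data_gb(nodes: int) -> int:
    """Return the minimum data volume, in GB, for a test on `nodes` nodes."""
    return max(MIN_DATA_GB, GB_PER_NODE * nodes)


def check_plan(nodes: int, data_gb: int, test_minutes: int) -> list[str]:
    """Return a list of problems with the plan; an empty list means it passes."""
    problems = []
    needed = min_test_data_gb(nodes)
    if data_gb < needed:
        problems.append(f"need at least {needed} GB of data, have {data_gb} GB")
    if test_minutes < MIN_TEST_MINUTES:
        problems.append(f"run for at least {MIN_TEST_MINUTES} minutes, planned {test_minutes}")
    return problems


small_cluster = check_plan(nodes=3, data_gb=300, test_minutes=10)
large_cluster = check_plan(nodes=6, data_gb=300, test_minutes=5)
```

For example, a 6-node cluster needs 600 GB under the per-node rule, so a 300 GB, 5-minute plan fails on both counts.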