Amazon AWS Certified DevOps Engineer - Professional DOP-C02 Exam Practice Test

A DevOps engineer needs to back up sensitive Amazon S3 objects that are stored within an S3 bucket with a private bucket policy using S3 cross-Region replication functionality. The objects need to be copied to a target bucket in a different AWS Region and account.

Which combination of actions should be performed to enable this replication? (Choose three.)

A DevOps engineer is building an application that uses an AWS Lambda function to query an Amazon Aurora MySQL DB cluster. The Lambda function performs only read queries. Amazon EventBridge events invoke the Lambda function.

As more events invoke the Lambda function each second, the database's latency increases and the database's throughput decreases. The DevOps engineer needs to improve the performance of the application.

Which combination of steps will meet these requirements? (Select THREE.)

Answer : A, C, E

Verified Answer: A, C, and E

Short explanation: To improve the performance of the application, the DevOps engineer should use Amazon RDS Proxy, open the database connection outside the Lambda event handler code, and connect to the proxy endpoint from the Lambda function.

The Lambda function should connect to the proxy endpoint, which is the hostname that represents the proxy, instead of connecting to the database directly. The function then reaches the database through the proxy, which provides benefits such as connection pooling, load balancing, failover handling, and enhanced security.

The other options are incorrect because:

Implementing database connection pooling inside the Lambda code is unnecessary and redundant when using Amazon RDS Proxy, which already provides connection pooling as a service.

Implementing the database connection opening and closing inside the Lambda event handler code is inefficient and costly, as it can increase latency and consume more resources for each invocation of the Lambda function.

Connecting to the Aurora cluster endpoint from the Lambda function is not optimal for read-only queries. The cluster endpoint always routes to the primary (writer) instance, so every read query competes with write operations on that instance, and each Lambda execution environment still opens its own database connection without the pooling that the proxy provides.
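
The connection-reuse pattern from the correct options can be sketched in Python as follows. This is a minimal illustration, not a definitive implementation: it assumes the PyMySQL driver is packaged with the function, and the environment variable names (`DB_PROXY_ENDPOINT`, `DB_USER`, `DB_PASSWORD`, `DB_NAME`) and the `connect` parameter are hypothetical. The connection lives in a module-level variable, so warm invocations of the same execution environment reuse it instead of reconnecting.

```python
import os

# Module-level cache: lives outside the handler, so it survives across
# warm invocations of the same Lambda execution environment.
_conn = None


def _default_connect():
    """Open a connection to the RDS Proxy endpoint (hypothetical env vars)."""
    import pymysql  # third-party driver, assumed to be bundled with the function

    return pymysql.connect(
        host=os.environ["DB_PROXY_ENDPOINT"],  # proxy endpoint, not the cluster endpoint
        user=os.environ["DB_USER"],
        password=os.environ["DB_PASSWORD"],
        database=os.environ["DB_NAME"],
        connect_timeout=5,
    )


def get_connection(connect=_default_connect):
    """Return the cached connection, opening it only on first use."""
    global _conn
    if _conn is None:
        _conn = connect()
    return _conn


def handler(event, context, connect=_default_connect):
    """Lambda entry point: runs a read-only query on the shared connection."""
    conn = get_connection(connect)
    with conn.cursor() as cur:
        cur.execute("SELECT 1")
        row = cur.fetchone()
    # The connection is deliberately not closed here; the proxy pools
    # connections to the database on the other side.
    return {"result": row[0]}
```

Lambda calls `handler(event, context)` with two arguments, so the `connect` parameter keeps its default in production and exists only to let a stand-in connection be supplied when experimenting locally.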

A company uses a pipeline in AWS CodePipeline to deploy an application. The company created an AWS Fault Injection Service (AWS FIS) experiment template to test the resiliency of the application. A DevOps engineer needs to integrate the experiment into the pipeline.

Which solution will meet this requirement?

Answer : C

A company runs a website by using an Amazon Elastic Container Service (Amazon ECS) service that is connected to an Application Load Balancer (ALB). The service was in a steady state with tasks responding to requests successfully. A DevOps engineer updated the task definition with a new container image and deployed the new task definition to the service. The DevOps engineer noticed that the service is frequently stopping and starting new tasks because the ALB health checks are failing. What should the DevOps engineer do to troubleshoot the failed deployment?

Answer : A

A company's web app runs on EC2 with a relational database. The company wants highly available multi-Region architecture with latency-based routing for global customers.

Which solution meets these requirements?

Answer : A

ALBs with Auto Scaling across AZs ensure high availability in each Region.

Aurora global database supports cross-Region read replicas with low latency for reads and is recommended for multi-Region high availability.

Route 53 latency-based routing sends users to the closest Region.

RDS without global database (Options B, D) has higher replication lag and less resilience.

Using CloudFront in front of ALBs (Options C and D) adds caching but is not required for latency-based routing and increases complexity.
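
As a rough illustration of the latency-based routing piece, the Python sketch below builds the change batch for one latency alias record per Region. The record name, ALB DNS names, and hosted zone IDs are placeholders, and the helper names are hypothetical. The dictionary follows the shape accepted by the Route 53 `ChangeResourceRecordSets` API, where each latency record needs a `Region` and a unique `SetIdentifier`.

```python
def latency_alias_change(record_name, region, alb_dns_name, alb_hosted_zone_id):
    """Build one UPSERT for a latency-based alias record pointing at an ALB."""
    return {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": record_name,
            "Type": "A",
            # SetIdentifier must be unique among records with the same name and type.
            "SetIdentifier": f"{region}-alb",
            # Region marks this as a latency record for that AWS Region.
            "Region": region,
            "AliasTarget": {
                "HostedZoneId": alb_hosted_zone_id,  # the ALB's own hosted zone ID
                "DNSName": alb_dns_name,
                "EvaluateTargetHealth": True,
            },
        },
    }


def build_change_batch(record_name, albs):
    """Combine one latency record per Region into a single change batch."""
    return {"Changes": [latency_alias_change(record_name, **alb) for alb in albs]}


# Placeholder values for illustration only.
batch = build_change_batch(
    "app.example.com",
    [
        {"region": "us-east-1", "alb_dns_name": "alb-use1.example.com",
         "alb_hosted_zone_id": "ZEXAMPLEUSE1"},
        {"region": "eu-west-1", "alb_dns_name": "alb-euw1.example.com",
         "alb_hosted_zone_id": "ZEXAMPLEEUW1"},
    ],
)
# With boto3 this batch could be submitted through
# route53.change_resource_record_sets(HostedZoneId=..., ChangeBatch=batch).
```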

A company is implementing a standardized security baseline across its AWS accounts. The accounts are in an organization in AWS Organizations. The company must deploy consistent IAM roles and policies across all existing and future accounts in the organization. Which solution will meet these requirements with the MOST operational efficiency?

Answer : B

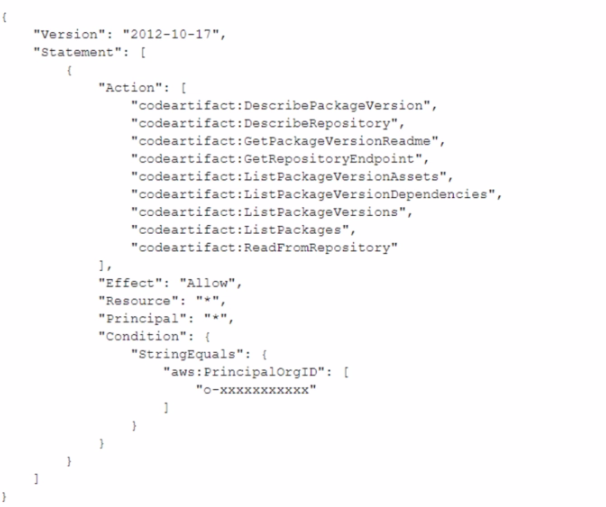

A company uses AWS CodeArtifact to centrally store Python packages. The CodeArtifact repository is configured with the following repository policy.

A development team is building a new project in an account that is in an organization in AWS Organizations. The development team wants to use a Python library that has already been stored in the CodeArtifact repository in the organization. The development team uses AWS CodePipeline and AWS CodeBuild to build the new application. The CodeBuild job that the development team uses to build the application is configured to run in a VPC. Because of compliance requirements, the VPC has no internet connectivity.

The development team creates the VPC endpoints for CodeArtifact and updates the CodeBuild buildspec YAML file. However, the development team cannot download the Python library from the repository.

Which combination of steps should a DevOps engineer take so that the development team can use CodeArtifact? (Select TWO.)

Answer : A, D

From the CodeArtifact features page (https://aws.amazon.com/codeartifact/features/): "AWS CodeArtifact operates in multiple Availability Zones and stores artifact data and metadata in Amazon S3 and Amazon DynamoDB. Your encrypted data is redundantly stored across multiple facilities and multiple devices in each facility, making it highly available and highly durable."

Because CodeArtifact stores package assets in Amazon S3 and the VPC has no internet connectivity, the CodeBuild job needs an Amazon S3 gateway endpoint in the VPC to download the packages.
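
The S3 gateway endpoint requirement can be sketched in Python as below. The helper name and the VPC, Region, and route table values are placeholders chosen for illustration. The parameter names match the EC2 `CreateVpcEndpoint` API as exposed by boto3's `create_vpc_endpoint`, and the sketch covers only the gateway endpoint, not the other selected option.

```python
def s3_gateway_endpoint_params(vpc_id, region, route_table_ids):
    """Build the request parameters for an S3 gateway endpoint in a VPC."""
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        # Gateway endpoints for S3 use the regional service name.
        "ServiceName": f"com.amazonaws.{region}.s3",
        # Route tables of the subnets that the CodeBuild job runs in.
        "RouteTableIds": list(route_table_ids),
    }


# Placeholder identifiers for illustration only.
params = s3_gateway_endpoint_params(
    vpc_id="vpc-0123456789abcdef0",
    region="us-east-1",
    route_table_ids=["rtb-0123456789abcdef0"],
)
# With boto3 these could be passed as
# boto3.client("ec2").create_vpc_endpoint(**params).
```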