Amazon AWS Certified Solutions Architect - Professional SAP-C02 Exam Questions

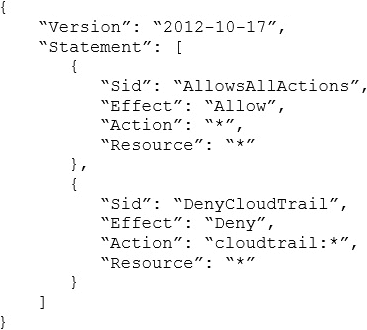

A company with several AWS accounts is using AWS Organizations and service control policies (SCPs). An Administrator created the following SCP and has attached it to an organizational unit (OU) that contains AWS account 1111-1111-1111:

Developers working in account 1111-1111-1111 complain that they cannot create Amazon S3 buckets. How should the Administrator address this problem?

Answer : C

However, A's explanation is incorrect: https://docs.aws.amazon.com/organizations/latest/userguide/orgs_manage_policies_scps.html

'SCPs are similar to AWS Identity and Access Management (IAM) permission policies and use almost the same syntax. However, an SCP never grants permissions.'

SCPs alone are not sufficient for granting permissions to the accounts in your organization. No permissions are granted by an SCP. An SCP defines a guardrail, or sets limits, on the actions that the account's administrator can delegate to the IAM users and roles in the affected accounts. The administrator must still attach identity-based or resource-based policies to IAM users or roles, or to the resources in your accounts, to actually grant permissions. The effective permissions are the logical intersection between what is allowed by the SCP and what is allowed by the IAM and resource-based policies.
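The intersection rule can be illustrated with a small Python sketch. It is a simplification, not how AWS evaluates policies internally: it models only Allow lists of action patterns and ignores explicit Deny statements, resources, and conditions. The function names and the example policies are hypothetical, and the SCP from the question is not reproduced here.

```python
from fnmatch import fnmatchcase


def is_allowed(action: str, patterns: list[str]) -> bool:
    """Return True if the action matches any allowed pattern, e.g. "s3:*".

    Action names are compared case-insensitively. fnmatch-style wildcards
    are used here as an approximation of policy wildcards.
    """
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)


def effective_allow(action: str, scp_allow: list[str], identity_allow: list[str]) -> bool:
    """An action is usable only if BOTH the SCP and the identity policy allow it."""
    return is_allowed(action, scp_allow) and is_allowed(action, identity_allow)


# Hypothetical example: the SCP allow list does not cover S3,
# so an IAM policy that allows s3:* still cannot create buckets.
scp = ["ec2:*", "cloudwatch:*"]
iam = ["s3:*", "ec2:Describe*"]
print(effective_allow("s3:CreateBucket", scp, iam))
print(effective_allow("ec2:DescribeInstances", scp, iam))
```

Under these assumptions, adding `s3:*` to the SCP allow list would make the first check pass, while the SCP by itself (with an empty identity policy) would still allow nothing.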

A company hosts its primary API on AWS using Amazon API Gateway and AWS Lambda functions. Internal applications and external customers use this API. Some customers also use a legacy API hosted on a standalone EC2 instance.

The company wants to increase security across all APIs to prevent denial of service (DoS) attacks, check for vulnerabilities, and guard against common exploits.

What should a solutions architect do to meet these requirements?

Answer : C

C is correct because:

AWS WAF integrates natively with API Gateway and protects against common web exploits (e.g., SQL injection, XSS).

Amazon Inspector can scan the legacy EC2 instance for known vulnerabilities.

Amazon GuardDuty is a continuous security monitoring service that detects threats but does not block traffic (B and D are incorrect because GuardDuty doesn't block).

AWS WAF Overview

Amazon Inspector Overview

Amazon GuardDuty Overview
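As an illustration of how AWS WAF can help against request floods, the following Python sketch builds a rate-based rule in the shape accepted in the `Rules` list of a WAFv2 web ACL. The explanation above does not say which rules to use, so the rule name, the limit, and the helper function are assumptions chosen for illustration.

```python
def rate_based_rule(name: str, limit: int, priority: int = 0) -> dict:
    """Build a WAFv2 rule that blocks source IPs exceeding `limit` requests
    within the rate-evaluation window."""
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {
            # Count requests per source IP address.
            "RateBasedStatement": {"Limit": limit, "AggregateKeyType": "IP"}
        },
        "Action": {"Block": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": name,
        },
    }


# Hypothetical values; tune the limit to the API's real traffic profile.
rule = rate_based_rule("throttle-per-ip", limit=2000)
print(rule["Statement"])
```

A web ACL carrying such a rule would then be associated with the API Gateway stage; the legacy EC2-hosted API would need a WAF-supported front end to benefit from it.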

An online magazine will launch its latest edition this month. This edition will be the first to be distributed globally. The magazine's dynamic website currently uses an Application Load Balancer in front of the web tier, a fleet of Amazon EC2 instances for web and application servers, and Amazon Aurora MySQL. Portions of the website include static content and almost all traffic is read-only.

The magazine is expecting a significant spike in internet traffic when the new edition is launched. Optimal performance is a top priority for the week following the launch.

Which combination of steps should a solutions architect take to reduce system response times for a global audience? (Choose two.)

Answer : D, E

A company consists of two separate business units. Each business unit has its own AWS account within a single organization in AWS Organizations. The business units regularly share sensitive documents with each other. To facilitate sharing, the company created an Amazon S3 bucket in each account and configured two-way replication between the S3 buckets. The S3 buckets have millions of objects.

Recently, a security audit identified that neither S3 bucket has encryption at rest enabled. Company policy requires that all documents must be stored with encryption at rest. The company wants to implement server-side encryption with Amazon S3 managed encryption keys (SSE-S3).

What is the MOST operationally efficient solution that meets these requirements?

Answer : A

The question states that 'The S3 buckets have millions of objects'. With millions of existing objects, use S3 Batch Operations to encrypt them: https://aws.amazon.com/blogs/storage/encrypting-objects-with-amazon-s3-batch-operations/
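To make the per-object work concrete, here is a minimal Python sketch of the in-place copy that re-encrypts one existing object with SSE-S3. A Batch Operations copy job applies an equivalent copy to every object listed in its manifest. The helper name and the bucket and key values are hypothetical, and the sketch only builds the arguments for boto3's `copy_object` rather than calling AWS.

```python
def sse_s3_copy_args(bucket: str, key: str) -> dict:
    """Arguments for an S3 copy that rewrites an object onto itself
    with SSE-S3 (AES256) encryption at rest."""
    return {
        "Bucket": bucket,
        "Key": key,
        "CopySource": {"Bucket": bucket, "Key": key},
        # Changing the encryption attribute is what makes a self-copy valid.
        "ServerSideEncryption": "AES256",
        "MetadataDirective": "COPY",
    }


# Hypothetical bucket and key. With boto3 installed and credentials set up:
#   boto3.client("s3").copy_object(**sse_s3_copy_args("docs-bucket", "a/report.pdf"))
print(sse_s3_copy_args("docs-bucket", "a/report.pdf"))
```

Running this object by object would be slow for millions of keys, which is why a managed batch job is the operationally efficient choice.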

A financial services company runs a complex, multi-tier application on Amazon EC2 instances and AWS Lambda functions. The application stores temporary data in Amazon S3. The S3 objects are valid for only 45 minutes and are deleted after 24 hours.

The company deploys each version of the application by launching an AWS CloudFormation stack. The stack creates all resources that are required to run the application. When the company deploys and validates a new application version, the company deletes the CloudFormation stack of the old version.

The company recently tried to delete the CloudFormation stack of an old application version, but the operation failed. An analysis shows that CloudFormation failed to delete an existing S3 bucket. A solutions architect needs to resolve this issue without making major changes to the application's architecture.

Which solution meets these requirements?

Answer : D

This option uses the DeletionPolicy attribute to specify how AWS CloudFormation handles the S3 bucket when the stack is deleted [1]. With a value of Delete, CloudFormation deletes the bucket along with the stack. Note that, according to the AWS documentation for the DeletionPolicy attribute, deleting an S3 bucket succeeds only if all objects in the bucket have been deleted, so the bucket must be empty at that point. This option does not require any major changes to the application's architecture or any additional resources.

[1] Deletion policies
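For reference, the sketch below shows where a DeletionPolicy attribute sits in a CloudFormation template, built as a Python dictionary and printed as JSON. The logical ID `TempDataBucket` and the helper function are hypothetical; the original stack template is not shown in the question.

```python
import json


def bucket_template(logical_id: str = "TempDataBucket") -> dict:
    """Minimal template with an S3 bucket that carries a DeletionPolicy."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                # DeletionPolicy is a resource attribute, a sibling of
                # Type and Properties, not a bucket property.
                "DeletionPolicy": "Delete",
            }
        },
    }


print(json.dumps(bucket_template(), indent=2))
```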

A company owns a chain of travel agencies and is running an application in the AWS Cloud. Company employees use the application to search for information about travel destinations. Destination content is updated four times each year.

Two fixed Amazon EC2 instances serve the application. The company uses an Amazon Route 53 public hosted zone with a multivalue record of travel.example.com that returns the Elastic IP addresses for the EC2 instances. The application uses Amazon DynamoDB as its primary data store. The company uses a self-hosted Redis instance as a caching solution.

During content updates, the load on the EC2 instances and the caching solution increases drastically. This increased load has led to downtime on several occasions. A solutions architect must update the application so that the application is highly available and can handle the load that is generated by the content updates.

Which solution will meet these requirements?

Answer : A

This option allows the company to use DAX to improve performance and reduce the latency of DynamoDB queries by caching results in memory [1]. By updating the application to use DAX, the company can reduce the load on the DynamoDB tables and avoid throttling errors [1]. By creating an Auto Scaling group for the EC2 instances, the company can adjust the number of instances based on demand and ensure high availability [2]. By creating an ALB, the company can distribute incoming traffic across multiple EC2 instances and improve fault tolerance [3]. By updating the Route 53 record to use a simple routing policy that targets the ALB's DNS alias, the company can route users to the ALB endpoint and leverage its health checks and load balancing features [4]. By configuring scheduled scaling for the EC2 instances before the content updates, the company can anticipate and handle traffic spikes during peak periods [5].

[1] What is Amazon DynamoDB Accelerator (DAX)?

[2] What is Amazon EC2 Auto Scaling?

[3] What is an Application Load Balancer?

[4] Choosing a routing policy

[5] Scheduled scaling for Amazon EC2 Auto Scaling
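The scheduled scaling step could be sketched as follows. The Python helper builds the arguments for the EC2 Auto Scaling `PutScheduledUpdateGroupAction` API (boto3: `put_scheduled_update_group_action`) so that capacity rises shortly before a content update. The group name, capacities, lead time, and date are all hypothetical values, not taken from the question.

```python
from datetime import datetime, timedelta, timezone


def scale_out_action(
    group_name: str,
    update_start: datetime,
    lead_time: timedelta = timedelta(minutes=30),
    min_size: int = 2,
    desired: int = 6,
    max_size: int = 10,
) -> dict:
    """Arguments for a one-time scheduled action that scales the group out
    `lead_time` before the content update begins."""
    return {
        "AutoScalingGroupName": group_name,
        "ScheduledActionName": f"pre-update-{update_start:%Y%m%d}",
        "StartTime": update_start - lead_time,
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired,
    }


# Hypothetical quarterly update at 09:00 UTC. With boto3 and credentials:
#   boto3.client("autoscaling").put_scheduled_update_group_action(**action)
action = scale_out_action("travel-web", datetime(2030, 1, 15, 9, 0, tzinfo=timezone.utc))
print(action["ScheduledActionName"], action["StartTime"].isoformat())
```

Because content changes only four times a year, one-time actions like this are a reasonable fit; a second action after the update would bring capacity back down.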

A company hosts an ecommerce site using EC2, ALB, and DynamoDB in one AWS Region. The site uses a custom domain in Route 53. The company wants to replicate the stack to a second Region for disaster recovery and faster access for global customers.

What should the architect do?

Answer : A

A is correct because:

CloudFormation templates enable repeatable infrastructure deployment.

Route 53 latency-based routing ensures users hit the closest Region.

DynamoDB global tables allow multi-Region, active-active replication of application data.

Manual console work (B) is not scalable.

C lacks EC2/ALB replication.

D adds unnecessary services like Beanstalk and doesn't scale cleanly.
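As a rough sketch of the latency-based routing piece, the Python code below builds a Route 53 change batch with one alias record per Region, each pointing at that Region's ALB. The domain, ALB DNS names, and hosted zone IDs are placeholders, and the helper names are hypothetical; the resulting dictionary has the shape expected by the `ChangeBatch` parameter of `change_resource_record_sets`.

```python
def latency_alias_record(domain: str, region: str, alb_dns: str, alb_zone_id: str) -> dict:
    """One latency-based alias A record that targets a Regional ALB."""
    return {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": domain,
            "Type": "A",
            # SetIdentifier must be unique among records sharing name and type.
            "SetIdentifier": f"{domain}-{region}",
            "Region": region,
            "AliasTarget": {
                "HostedZoneId": alb_zone_id,
                "DNSName": alb_dns,
                "EvaluateTargetHealth": True,
            },
        },
    }


def change_batch(domain: str, albs: dict[str, tuple[str, str]]) -> dict:
    """Build a change batch from {region: (alb_dns_name, alb_hosted_zone_id)}."""
    return {
        "Changes": [
            latency_alias_record(domain, region, dns, zone)
            for region, (dns, zone) in albs.items()
        ]
    }


# Placeholder values only; real ALB DNS names and zone IDs come from each Region.
batch = change_batch(
    "shop.example.com",
    {
        "us-east-1": ("alb-use1.example.com", "ZONE_ID_PLACEHOLDER_1"),
        "eu-west-1": ("alb-euw1.example.com", "ZONE_ID_PLACEHOLDER_2"),
    },
)
print([c["ResourceRecordSet"]["Region"] for c in batch["Changes"]])
```

Setting `EvaluateTargetHealth` to true lets Route 53 stop answering with a Region whose ALB is unhealthy, which supports the disaster recovery goal alongside lower latency.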