CompTIA Data+ Certification DA0-001 Exam Practice Test

SIMULATION

The director of operations at a power company needs data to help identify where company resources should be allocated in order to monitor activity for outages and restoration of power in the entire state. Specifically, the director wants to see the following:

* County outages

* Status

* Overall trend of outages

INSTRUCTIONS:

Please select each visualization to fit the appropriate space on the dashboard and choose an appropriate color scheme. Once you have selected all visualizations, please select the appropriate titles and labels, if applicable. Titles and labels may be used more than once.

If at any time you would like to bring back the initial state of the simulation, please click the Reset All button.

Answer : A

This is a simulation question that requires you to create a dashboard with visualizations that meet the director's needs. Here are the steps to complete the task:

Drag and drop the visualization that shows the county outages on the top left space of the dashboard. This visualization is a map of the state with different colors indicating the number of outages in each county. You can choose any color scheme that suits your preference, but make sure that the colors are consistent and clear. For example, you can use a gradient that runs from green for counties with fewer outages to red for counties with more outages.

Drag and drop the visualization that shows the status of the outages on the top right space of the dashboard. This visualization is a pie chart that shows the percentage of outages that are active, restored, or pending. You can choose any color scheme that suits your preference, but make sure that the colors are distinct and easy to identify. For example, you can use red for active, green for restored, and yellow for pending.

Drag and drop the visualization that shows the overall trend of outages on the bottom space of the dashboard. This visualization is a line graph that shows the number of outages over time. You can choose any color scheme that suits your preference, but make sure that the line color is visible and contrasts with the background. For example, you can use blue for the line and white for the background.

Select appropriate titles and labels for each visualization. Titles and labels may be used more than once. For example, you can use "County Outages" as the title for the map, "Status" as the title for the pie chart, and "Trend" as the title for the line graph. You can also use "County", "Number of Outages", "Active", "Restored", "Pending", and "Time" as labels for the axes and legends of the visualizations, reusing "Number of Outages" wherever more than one visualization needs it.

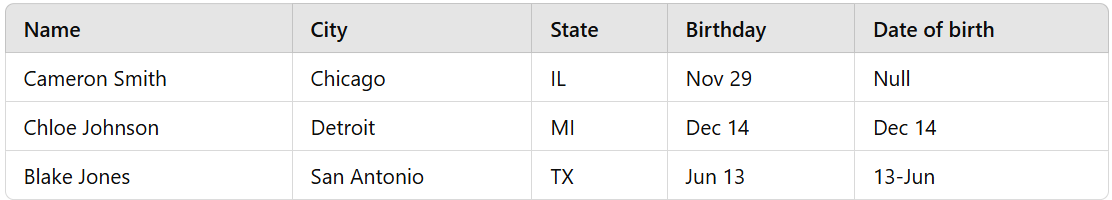

A data set has the following values:

Which of the following is the best reason for cleansing the data?

Answer : D

Comprehensive and detailed explanation:

In this dataset, we can see an issue with incomplete or missing data:

Cameron Smith's 'Date of Birth' field is Null, which indicates missing data that needs to be filled in.

The format inconsistency in 'Date of Birth' (e.g., '13-Jun' vs. 'Dec 14') can also be problematic, requiring standardization.

Option A (Invalid data): Incorrect. The data is not necessarily invalid, but it is incomplete.

Option B (Redundant data): Incorrect. Redundant data means unnecessary duplication, which is not the case here.

Option C (Data outliers): Incorrect. Outliers refer to values that are extremely different from the rest of the dataset, which does not apply here.

Option D (Missing data): Correct. The 'Date of Birth' field has missing values (e.g., 'Null'), requiring data cleansing.
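As a rough illustration of how missing values like this might be flagged before cleansing, here is a minimal Python sketch. The table itself is not reproduced in this text, so the records are hypothetical: only Cameron Smith's null 'Date of Birth' and the two date formats come from the explanation above, and the helper name `find_missing` and the placeholder names are assumptions.

```python
# Hypothetical records. Only Cameron Smith's null date of birth and the
# two date formats ('13-Jun', 'Dec 14') come from the explanation above.
records = [
    {"name": "Person A", "date_of_birth": "13-Jun"},
    {"name": "Person B", "date_of_birth": "Dec 14"},
    {"name": "Cameron Smith", "date_of_birth": None},
]


def find_missing(rows, field):
    """Return the rows whose `field` is absent, None, blank, or the text 'Null'."""
    missing = []
    for row in rows:
        value = row.get(field)
        if value is None or str(value).strip().lower() in {"", "null"}:
            missing.append(row)
    return missing


missing_rows = find_missing(records, "date_of_birth")
for row in missing_rows:
    print(f"{row['name']}: date_of_birth is missing")
```

A check like this only locates the gaps; deciding how to fill them (or whether to exclude the rows) is a separate cleansing decision.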

Which of the following is an example of PII?

Answer : B

A name is an example of personally identifiable information (PII), which is any data that can be used to identify someone, either on its own or with other relevant data. A name is a direct identifier, which means that it can uniquely identify a person without the need for any additional information. For example, a full name, such as John Smith, can be used to distinguish or trace an individual's identity.

Other examples of direct identifiers include:

* Social Security number

* Passport number

* Driver's license number

* Email address

* Phone number

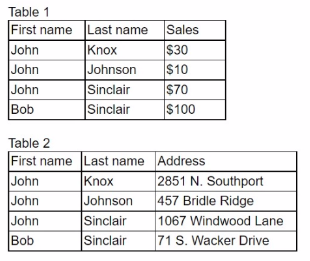

A data analyst has been asked to create one table that has each employee's first name, last name, sales, and address. The sales and addresses are listed in the tables below:

Which of the following steps should the analyst take to create the table?

Answer : D

Alex wants to use data from his corporate sales, CRM, and shipping systems to try to predict future sales.

Which of the following systems is the most appropriate?

Choose the best answer.

Answer : C

Correct answer: C. Data Warehouse.

A data warehouse brings together data from multiple systems used by an organization.

A data mart is too narrow, as Alex needs data from across multiple divisions.

OLAP is a broad term for analytical processing, and OLTP systems are transactional and not ideal for this task.

While reviewing survey data, an analyst notices respondents entered "Jan," "January," and "01" as responses for the month of January. Which of the following steps should be taken to ensure data consistency?

Answer : C

Filter on any of the responses that do not say "January" and update them to "January". This is because filtering and updating are data cleansing techniques that can be used to ensure data consistency, which means that the data is uniform and follows a standard format. By filtering on any of the responses that do not say "January" and updating them to "January", the analyst can make sure that all the responses for the month of January are written in the same way. The other steps are not appropriate for ensuring data consistency. Here is why:

Deleting any of the responses that do not have "January" written out would result in data loss, which means that some information would be missing from the data set. This could affect the accuracy and reliability of the analysis.

Replacing any of the responses that have "01" would not solve the problem of data inconsistency, because there would still be two different ways of writing the month of January: "Jan" and "January". This could cause confusion and errors in the analysis.

Sorting any of the responses that say "Jan" and updating them to "01" would also not solve the problem of data inconsistency, because there would still be two different ways of writing the month of January: "01" and "January". This could also cause confusion and errors in the analysis.
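The filter-and-update step described above can be sketched in a few lines of Python. This is an illustration only: the function name `normalize_month` and the sample list (including the "March" entry) are assumptions, and a real survey would need a mapping for every month.

```python
# Variants of January seen in the survey, compared case-insensitively.
JANUARY_VARIANTS = {"jan", "january", "01"}


def normalize_month(response):
    """Return 'January' for any known January variant, else the input unchanged."""
    cleaned = response.strip().lower()
    if cleaned in JANUARY_VARIANTS:
        return "January"
    return response


# Hypothetical sample of survey responses.
responses = ["Jan", "January", "01", "March"]
normalized = [normalize_month(r) for r in responses]
print(normalized)  # ['January', 'January', 'January', 'March']
```

No responses are deleted here; every January variant is rewritten to the one standard spelling, which is what keeps the data consistent without losing information.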

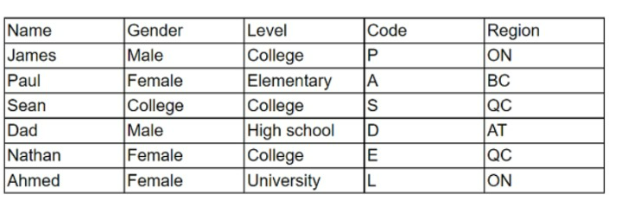

Given the table below:

Which of the following variables can be considered inconsistent, and how many distinct values should the variable have?

Answer : B

The table provided shows an inconsistency in the 'Gender' column, which lists three distinct values: Male, Female, and College. This is inconsistent because 'College' is not a gender category. The 'Gender' column should only have two distinct values, typically 'Male' and 'Female', to accurately represent gender data. This error could be due to a data entry mistake or a misclassification during data collection.

In data analysis, it's crucial to ensure that categorical variables like gender are consistent and correctly classified, as this can significantly impact the analysis results. Data cleaning processes often involve identifying and correcting such inconsistencies to maintain the integrity of the data set.
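One simple way to surface this kind of inconsistency is to compare the distinct values in a column against the set of values it is expected to hold. The sketch below assumes the two expected categories named in the explanation above; the column contents and the helper name `unexpected_values` are hypothetical, since the original table is not reproduced here.

```python
# Expected categories, as stated in the explanation above.
EXPECTED_GENDER_VALUES = {"Male", "Female"}

# Hypothetical column contents; the real table is not reproduced here.
gender_column = ["Male", "Female", "College", "Female", "Male"]


def unexpected_values(values, expected):
    """Return the sorted distinct values that are not in the expected set."""
    return sorted(set(values) - expected)


distinct = set(gender_column)
bad = unexpected_values(gender_column, EXPECTED_GENDER_VALUES)
print(len(distinct), bad)  # 3 ['College']
```

The count of distinct values (three) exceeding the expected count (two) is the signal that the column needs cleaning, and the leftover value shows which entries to review.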

Data quality management principles emphasize the importance of consistency in data values, especially for categorical variables like gender.

Best practices in data cleaning include checking for and rectifying inconsistencies or misclassifications in data sets.

The importance of accurate data classification is highlighted in data analysis literature, as it directly affects the validity of the analysis results.