Databricks Certified Data Analyst Associate Exam Practice Test

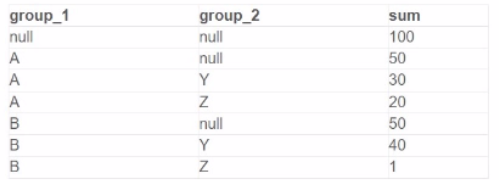

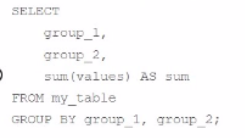

A data analyst is processing a complex aggregation on a table with zero null values and the query returns the following result:

Which query did the analyst execute in order to get this result?

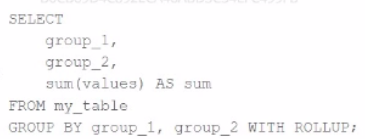

A)

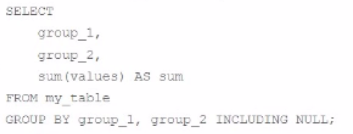

B)

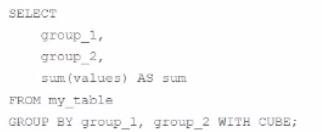

C)

D)

Answer : B

A stakeholder has provided a data analyst with a lookup dataset in the form of a 50-row CSV file. The data analyst needs to upload this dataset for use as a table in Databricks SQL.

Which approach should the data analyst use to quickly upload the file into a table for use in Databricks SQL?

Answer : A

What is used as a compute resource for Databricks SQL?

Answer : C

Which statement about subqueries is correct?

Answer : C

A data analyst has created a user-defined function using the following line of code:

CREATE FUNCTION price(spend DOUBLE, units DOUBLE) RETURNS DOUBLE RETURN spend / units;

Which of the following code blocks can be used to apply this function to the customer_spend and customer_units columns of the table customer_summary to create column customer_price?

Answer : E
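As an illustration of how a scalar function like price is applied to two columns, here is a minimal sketch. It does not run against Databricks: it uses Python's built-in sqlite3 module as a stand-in, registers a Python function that mirrors the UDF body, and fills customer_summary with made-up sample rows. Only the SELECT statement reflects the pattern the question asks about; the sample data and the SQLite setup are assumptions for demonstration.

```python
import sqlite3


def price(spend, units):
    # Mirrors the UDF body from the question: RETURN spend / units
    return spend / units


# In-memory SQLite database used purely as a stand-in for Databricks SQL.
conn = sqlite3.connect(":memory:")

# Register the function under the same name and with two arguments.
conn.create_function("price", 2, price)

# Hypothetical sample data; the real customer_summary table is not shown in the question.
conn.execute(
    "CREATE TABLE customer_summary (customer_spend REAL, customer_units REAL)"
)
conn.executemany(
    "INSERT INTO customer_summary VALUES (?, ?)",
    [(100.0, 4.0), (45.0, 9.0)],
)

# Call the function on the two columns and alias the result as customer_price.
rows = conn.execute(
    "SELECT price(customer_spend, customer_units) AS customer_price "
    "FROM customer_summary"
).fetchall()

print(rows)
```

The function is called by name in the SELECT list with the columns passed as arguments, and the AS clause names the resulting column.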

A data analyst needs to share a Databricks SQL dashboard with stakeholders that are not permitted to have accounts in the Databricks deployment. The stakeholders need to be notified every time the dashboard is refreshed.

Which approach can the data analyst use to accomplish this task with minimal effort?

Answer : B

What does Partner Connect do when connecting Power BI and Tableau?

Answer : A