Databricks Certified Data Engineer Professional Exam Practice Test

A data team is implementing an append-only Delta Lake pipeline that processes both batch and streaming data. They want to ensure that schema changes in the source data are automatically incorporated without breaking the pipeline.

Which configuration should the team use when writing data to the Delta table?

Answer : B

Comprehensive and Detailed Explanation From Exact Extract of Databricks Data Engineer Documents:

When writing to a Delta Lake table, setting the mergeSchema option to true enables automatic schema evolution: columns that are present in the incoming data but missing from the table are added to the table schema as part of the write, instead of the write failing on a schema mismatch. This keeps pipelines resilient to upstream schema changes, especially in append-only or streaming ingestion scenarios. By contrast, overwriteSchema replaces the existing schema entirely and is meant for overwrite-mode writes, so it does not fit an append-only pipeline; ignoreChanges is an option for streaming reads from a Delta table and has no effect on the target schema; and validateSchema would only check consistency, not handle evolution. Therefore, B (mergeSchema = true) is the correct configuration for handling automatic schema evolution safely.
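As a minimal sketch of the configuration described above, the two helpers below set mergeSchema on an append-mode batch write and on a streaming write. The function names, `table_name`, and `checkpoint_path` are hypothetical; the DataFrames are assumed to come from an existing Spark session with Delta Lake available.

```python
def append_batch(df, table_name):
    """Append a batch DataFrame; new columns in df are merged into the table schema."""
    (
        df.write.format("delta")
        .mode("append")
        .option("mergeSchema", "true")  # evolve the table schema instead of failing
        .saveAsTable(table_name)
    )


def append_stream(stream_df, table_name, checkpoint_path):
    """Start an append-only streaming write with the same schema evolution option."""
    return (
        stream_df.writeStream.format("delta")
        .outputMode("append")
        .option("mergeSchema", "true")
        .option("checkpointLocation", checkpoint_path)  # hypothetical path supplied by the caller
        .toTable(table_name)
    )
```

Both helpers only configure the writer; which columns actually get added depends on the schema of the DataFrame passed in.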

A Databricks job has been configured with 3 tasks, each of which is a Databricks notebook. Task A does not depend on other tasks. Tasks B and C run in parallel, with each having a serial dependency on Task A.

If task A fails during a scheduled run, which statement describes the results of this run?

The business reporting team requires that data for their dashboards be updated every hour. The pipeline that extracts, transforms, and loads this data completes in 10 minutes. Assuming normal operating conditions, which configuration will meet their service-level agreement requirements with the lowest cost?

Answer : B

Comprehensive and Detailed Explanation From Exact Extract:

Exact extract: "Job clusters are created for a job run and terminate when the job completes."

Exact extract: "All-purpose (interactive) clusters are intended for interactive development and collaboration."

An hourly batch that completes in ~10 minutes is best run on ephemeral job clusters so compute exists only during execution and auto-terminates afterward, minimizing cost while meeting the hourly SLA. Interactive clusters persist (incurring idle cost). Structured Streaming for an hourly refresh adds continuous compute cost with no benefit here. Event-driven triggers are unnecessary for a simple hourly SLA.
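To make the cost argument concrete, here is a rough sketch of a job definition that runs hourly on a job cluster, plus the compute-time arithmetic. The payload follows the general shape of a Databricks Jobs API job definition; the job name, cluster key, notebook path, worker count, and the placeholder runtime and node type values are all assumptions for illustration, and cluster start-up time is ignored.

```python
import json


def hourly_job_payload(notebook_path):
    """Build a job definition that runs one notebook every hour on a job cluster."""
    return {
        "name": "hourly-dashboard-refresh",  # hypothetical job name
        "job_clusters": [
            {
                "job_cluster_key": "etl_cluster",
                "new_cluster": {
                    "spark_version": "<runtime-version>",  # placeholder, fill in per workspace
                    "node_type_id": "<node-type>",  # placeholder, cloud-specific
                    "num_workers": 2,
                },
            }
        ],
        "tasks": [
            {
                "task_key": "refresh",
                "job_cluster_key": "etl_cluster",
                "notebook_task": {"notebook_path": notebook_path},
            }
        ],
        # Quartz cron: second 0, minute 0 of every hour
        "schedule": {
            "quartz_cron_expression": "0 0 * * * ?",
            "timezone_id": "UTC",
            "pause_status": "UNPAUSED",
        },
    }


def daily_compute_hours(minutes_per_run, runs_per_day=24):
    """Hours per day that a cluster is up if it exists only while a run executes."""
    return minutes_per_run * runs_per_day / 60


payload = hourly_job_payload("/Repos/reporting/hourly_etl")  # hypothetical path
print(json.dumps(payload["schedule"], indent=2))
print(daily_compute_hours(10))  # 4.0 hours/day on an ephemeral job cluster
print(daily_compute_hours(60))  # 24.0 hours/day for a cluster that never stops
```

Under these assumptions the job cluster is billed for roughly 4 hours a day, against 24 for an always-on cluster.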


The data engineering team is migrating an enterprise system with thousands of tables and views into the Lakehouse. They plan to implement the target architecture using a series of bronze, silver, and gold tables. Bronze tables will almost exclusively be used by production data engineering workloads, while silver tables will be used to support both data engineering and machine learning workloads. Gold tables will largely serve business intelligence and reporting purposes. While personal identifying information (PII) exists in all tiers of data, pseudonymization and anonymization rules are in place for all data at the silver and gold levels.

The organization is interested in reducing security concerns while maximizing the ability to collaborate across diverse teams.

Which statement exemplifies best practices for implementing this system?

Answer : A

Isolating tables in separate databases by data quality tier (bronze, silver, and gold) is the best practice here because it gives the data engineering team several benefits. First, they can manage permissions for different users and groups through database ACLs, which allow granting or revoking access to databases, tables, or views; access to the bronze tier, where PII has not yet been pseudonymized or anonymized, can then be limited to production data engineering workloads. Second, they can physically separate the default storage locations for managed tables in each database, which keeps each tier's data apart at the storage level. Third, they can provide a clear and consistent naming convention for the tables in each database, which can improve discoverability and usability. Verified Reference: [Databricks Certified Data Engineer Professional], under "Lakehouse" section; Databricks Documentation, under "Database object privileges" section.
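The per-tier layout can be sketched as a small generator of SQL statements, one database per tier with its own storage location and read grants. The group names, storage paths, and the `TIERS` mapping are hypothetical, and the GRANT syntax assumes legacy table access control; the exact privilege names differ under Unity Catalog.

```python
# Hypothetical tiers: each database gets its own location and its own reader groups.
TIERS = {
    "bronze": {"location": "/mnt/lake/bronze", "readers": ["data-engineers"]},
    "silver": {"location": "/mnt/lake/silver", "readers": ["data-engineers", "ml-engineers"]},
    "gold": {"location": "/mnt/lake/gold", "readers": ["data-engineers", "bi-analysts"]},
}


def tier_statements(tiers):
    """Return the SQL statements that create each tier database and grant read access."""
    statements = []
    for name, cfg in tiers.items():
        statements.append(
            f"CREATE DATABASE IF NOT EXISTS {name} LOCATION '{cfg['location']}'"
        )
        for group in cfg["readers"]:
            statements.append(f"GRANT USAGE, SELECT ON DATABASE {name} TO `{group}`")
    return statements


for statement in tier_statements(TIERS):
    print(statement)
```

In a notebook, each statement could be passed to `spark.sql`; keeping the mapping in one place makes it easy to review which groups can read which tier.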

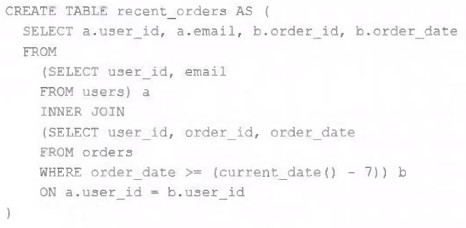

A table is registered with the following code:

Both users and orders are Delta Lake tables. Which statement describes the results of querying recent_orders?

Answer : B

A Databricks SQL dashboard has been configured to monitor the total number of records present in a collection of Delta Lake tables using the following query pattern:

SELECT COUNT(*) FROM table

Which of the following describes how results are generated each time the dashboard is updated?

A data engineer is using the Lakeflow Declarative Pipelines Expectations feature to track the data quality of their incoming sensor data. Periodically, sensors send bad readings that are out of range, and they are currently flagging those rows with a warning and writing them to the silver table along with the good data. They've been given a new requirement: the bad rows need to be quarantined in a separate quarantine table and no longer included in the silver table.

This is the existing code for their silver table:

@dlt.table
@dlt.expect("valid_sensor_reading", "reading < 120")
def silver_sensor_readings():
    return spark.readStream.table("bronze_sensor_readings")

What code will satisfy the requirements?

A.

@dlt.table
@dlt.expect("valid_sensor_reading", "reading < 120")
def silver_sensor_readings():
    return spark.readStream.table("bronze_sensor_readings")

@dlt.table
@dlt.expect("invalid_sensor_reading", "reading >= 120")
def quarantine_sensor_readings():
    return spark.readStream.table("bronze_sensor_readings")

B.

@dlt.table
@dlt.expect_or_drop("valid_sensor_reading", "reading < 120")
def silver_sensor_readings():
    return spark.readStream.table("bronze_sensor_readings")

@dlt.table
@dlt.expect("invalid_sensor_reading", "reading < 120")
def quarantine_sensor_readings():
    return spark.readStream.table("bronze_sensor_readings")

C.

@dlt.table
@dlt.expect_or_drop("valid_sensor_reading", "reading < 120")
def silver_sensor_readings():
    return spark.readStream.table("bronze_sensor_readings")

@dlt.table
@dlt.expect_or_drop("invalid_sensor_reading", "reading >= 120")
def quarantine_sensor_readings():
    return spark.readStream.table("bronze_sensor_readings")

D.

@dlt.table
@dlt.expect_or_drop("valid_sensor_reading", "reading < 120")
def silver_sensor_readings():
    return spark.readStream.table("bronze_sensor_readings")

@dlt.table
@dlt.expect("invalid_sensor_reading", "reading >= 120")
def quarantine_sensor_readings():
    return spark.readStream.table("bronze_sensor_readings")

Answer : C

Comprehensive and Detailed Explanation from Databricks Documentation:

Lakeflow Declarative Pipelines (formerly Delta Live Tables, DLT) supports data quality enforcement through expectation decorators such as @dlt.expect, @dlt.expect_or_drop, and @dlt.expect_all.

@dlt.expect applies a rule and records how many rows pass or fail the condition, but it does not drop failing rows; they are still written to the target table.

@dlt.expect_or_drop writes only the rows that pass the condition to the target table and drops the failing rows.

In this case, the requirement is:

Good rows (reading < 120) go to the silver table.

Bad rows (reading >= 120) go to a quarantine table.

Bad rows should not be included in silver.

The correct implementation is Option C, where:

The silver table uses @dlt.expect_or_drop with reading < 120, so out-of-range rows are dropped from silver.

The quarantine table uses @dlt.expect_or_drop with the inverse condition, reading >= 120, so in-range rows are dropped and only the bad rows are kept.

Other options are incorrect:

Option A: Uses @dlt.expect on both tables, so nothing is dropped; bad rows remain in silver, and the quarantine table receives every row.

Option B: The silver table is correct, but the quarantine table uses @dlt.expect with reading < 120, which only flags rows and keeps all of them.

Option D: The silver table is correct, but the quarantine table uses @dlt.expect with reading >= 120, which flags the good rows as violations without removing them, so the quarantine table still contains every row.

Thus, Option C meets the business requirement to split good and bad data into separate tables while ensuring both are captured for auditing and processing.
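The row-level behaviour of the two decorators, as defined above, can be modelled in plain Python. This is a toy model and not the DLT API: `expect`, `expect_or_drop`, and the sample `bronze` rows are illustrative stand-ins that only mimic which rows reach the target table.

```python
def expect(rows, predicate):
    """Toy model of @dlt.expect: keep every row, only count the violations."""
    failed = sum(1 for row in rows if not predicate(row))
    return list(rows), failed


def expect_or_drop(rows, predicate):
    """Toy model of @dlt.expect_or_drop: keep only the rows that pass."""
    kept = [row for row in rows if predicate(row)]
    return kept, len(rows) - len(kept)


def is_valid(row):
    return row["reading"] < 120


def is_invalid(row):
    return row["reading"] >= 120


# Hypothetical bronze rows: two in range, two out of range.
bronze = [
    {"sensor": "a", "reading": 72},
    {"sensor": "b", "reading": 150},
    {"sensor": "c", "reading": 119},
    {"sensor": "d", "reading": 120},
]

# Warn-only expectation: bad rows are counted but stay in the table.
silver_warn, warn_failures = expect(bronze, is_valid)
print(len(silver_warn), warn_failures)  # 4 2

# Drop expectations with inverse conditions split the rows into two tables.
silver, _ = expect_or_drop(bronze, is_valid)
quarantine, _ = expect_or_drop(bronze, is_invalid)
print([row["reading"] for row in silver])  # [72, 119]
print([row["reading"] for row in quarantine])  # [150, 120]
```

In this model every bronze row lands in exactly one of the two tables only when both tables drop on inverse conditions; a warn-only expectation leaves the table contents unchanged.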