Dell EMC Technology Architect, XtremIO Solutions Specialist E20-526 Exam Practice Test

A physical XtremIO Management Server (XMS) has failed and requires replacement. Which two software packages are required for recovery?

Answer : C

The first step is to reinstall the XMS image. For a physical XMS, you can install the image from a USB flash drive; for a virtual XMS, simply deploy the provided VMware OVA image.

The next step is to upload the XMS software to the images directory of the XMS and log in with install mode ........

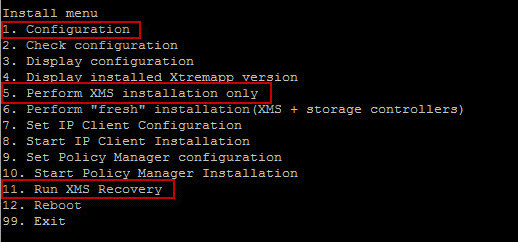

Once logged in to the XMS console with xinstall, perform the following sequence of steps:

1. Configuration

5. Perform XMS installation only

11. Run XMS Recovery

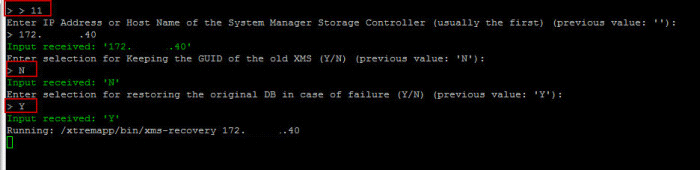

Options to choose when running the "XMS Recovery":

References: https://davidring.ie/2015/02/20/emc-xtremio-redeploying-xms-xtremio-management-server/

Which values are required to enter a generic workload into the XtremIO Sizing Tool?

Answer : C

IDC outlines some criteria for selecting a testing tool:

* Generate workloads

* Capture results for analysis:

  * Throughput

  * IOPS

  * Latency

References: http://emc.co/collateral/technical-documentation/h15280-euc-xendesktop-vsphere-xtremio-sg.pdf, page 87

http://info.xtremio.com/rs/xtremio/images/IDC_Flash_Array_Test_Guide.pdf, page 7
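As an illustration of the "capture results for analysis" criterion, the following minimal sketch derives the three metrics listed above from a set of completed I/Os. The function name `summarize`, the sample format, and the sample values are assumptions made up for this example; they do not come from the IDC guide or from the XtremIO Sizing Tool.

```python
from statistics import mean


def summarize(samples, duration_s):
    """Summarize completed I/Os.

    samples: list of (bytes_transferred, latency_ms), one entry per I/O.
    duration_s: length of the measurement window in seconds.
    """
    total_bytes = sum(size for size, _ in samples)
    return {
        # IOPS: completed I/Os per second of measurement time.
        "iops": len(samples) / duration_s,
        # Throughput: bytes moved per second, reported in MB/s (10^6 bytes).
        "throughput_mb_s": total_bytes / duration_s / 1_000_000,
        # Latency: mean completion time per I/O.
        "avg_latency_ms": mean(latency for _, latency in samples),
    }


# Made-up data: four 8 KB I/Os completed within a 2-second window.
samples = [(8192, 0.4), (8192, 0.6), (8192, 0.5), (8192, 0.5)]
result = summarize(samples, duration_s=2.0)
print(result)
```

For a fixed I/O size, throughput is simply IOPS multiplied by that size, which is why the three values are usually reported together.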

A customer has a VMware vSphere environment running Native Multipathing (NMP). Which path selection policy should be set for optimal performance when connected to an XtremIO cluster?

Answer : D

Configuring vSphere Native Multipathing.

For best performance, it is recommended to do the following:

Set the native round robin path selection policy on XtremIO volumes presented to the ESX host.
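A minimal sketch of how the round robin recommendation above might be applied per device. It only builds and prints the `esxcli storage nmp device set` command line rather than running it, since the command is meant for the ESXi host shell. The helper name `round_robin_command` and the device identifier are hypothetical; check the exact procedure against the XtremIO host configuration documentation for your version.

```python
import shlex


def round_robin_command(device_id):
    """Build the esxcli call that sets Round Robin as the PSP for one device."""
    return [
        "esxcli", "storage", "nmp", "device", "set",
        "--device", device_id,
        "--psp", "VMW_PSP_RR",  # VMware's native Round Robin path selection plugin
    ]


# Hypothetical device identifier, used only for illustration.
command = round_robin_command("naa.0000000000000001")
print(shlex.join(command))
```

Building the argument list first keeps the device identifier separate from the fixed part of the command, so the same helper can be reused for every XtremIO volume presented to the host.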

A customer has decided to use VMware Horizon View as their desktop virtualization technology. Their VDI environment will consist of XtremIO storage and ESXi hosts. They are looking for increased speed and low latencies while performing file copy operations.

What should the setting for VAAI XCOPY I/O size be set to in order to achieve this requirement?

Answer : C

The VAAI XCOPY I/O size of 256 kB gives the best performance. 4 MB is the default value.

References: https://www.emc.com/collateral/white-papers/h14279-wp-vmware-horizon-xtremio-design-considerations.pdf, page 57
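A minimal sketch of how the 256 KB value above could be expressed as an ESXi advanced setting. The option name `/DataMover/MaxHWTransferSize` is not mentioned in the explanation; it is assumed here to be the ESXi setting that controls the XCOPY transfer size (in KB), so verify it against the referenced white paper before use. The code only builds and prints the command line.

```python
import shlex

DEFAULT_XCOPY_KB = 4096      # 4 MB default mentioned in the explanation
RECOMMENDED_XCOPY_KB = 256   # 256 KB value mentioned in the explanation


def xcopy_size_command(size_kb):
    """Build the esxcli call that sets the XCOPY transfer size in KB."""
    return [
        "esxcli", "system", "settings", "advanced", "set",
        "--option", "/DataMover/MaxHWTransferSize",  # assumed option name
        "--int-value", str(size_kb),
    ]


command = xcopy_size_command(RECOMMENDED_XCOPY_KB)
print(shlex.join(command))
```

Passing `DEFAULT_XCOPY_KB` to the same helper produces the command that restores the default.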

A new 500 GB VM disk is created on a database that resides on an XtremIO LUN. The VMware administrator plans to provision the disk using the thick provisioned eager zeroed format.

How much physical XtremIO capacity will be allocated during this process?

Answer : D

XtremIO storage is natively thin provisioned, using a small internal block size. This provides fine-grained resolution for the thin provisioned space.

All volumes in the system are thin provisioned, meaning that the system consumes capacity only when it is actually needed. XtremIO determines where to place the unique data blocks physically inside the cluster after it calculates their fingerprint IDs. Therefore, it never pre-allocates or thick-provisions storage space before writing.

References: Introduction to the EMC XtremIO STORAGE ARRAY (April 2015), page 22
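The idea that capacity follows unique data rather than provisioned size can be illustrated with a toy content-addressed store. This is a sketch under stated assumptions, not XtremIO's implementation: the class `ThinDedupStore`, the 8 KB block size, and the use of SHA-256 as the fingerprint are all chosen for illustration, and how a real array treats zeroed blocks is not modelled here.

```python
import hashlib

BLOCK_SIZE = 8 * 1024  # assumed block size, for illustration only


class ThinDedupStore:
    """Toy store in which physical space is consumed per unique block."""

    def __init__(self):
        self.unique_blocks = {}  # fingerprint -> block data
        self.volume_map = {}     # logical block address -> fingerprint

    def write(self, lba, data):
        fingerprint = hashlib.sha256(data).hexdigest()
        # Only a block with a previously unseen fingerprint uses new space.
        self.unique_blocks.setdefault(fingerprint, data)
        self.volume_map[lba] = fingerprint

    def logical_bytes(self):
        return len(self.volume_map) * BLOCK_SIZE

    def physical_bytes(self):
        return len(self.unique_blocks) * BLOCK_SIZE


store = ThinDedupStore()
zero_block = bytes(BLOCK_SIZE)

# "Eager zeroing" 1000 logical blocks writes the same zero block each time.
for lba in range(1000):
    store.write(lba, zero_block)

print(store.logical_bytes(), store.physical_bytes())
```

In this model, the logical size grows with every zeroed block while the physical footprint stays at a single unique block, which mirrors why eager zeroing does not translate into pre-allocated capacity on a natively thin, deduplicating array.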

A customer wants to purchase an XtremIO array. After the array is installed, the customer wants to initially disable encryption. What is the result of enabling encryption on an array that is already being used?

Answer : A

Disable Encryption and Non-Encrypted Models

This version [Ver. 4.0.2-65] allows disabling Data at Rest Encryption (DARE), if desired. Disabling and enabling are done while the cluster is stopped.

References: https://samshouseblog.com/2015/12/29/new-features-and-changes-in-xtremio-ver-4-0-2-65/

As shown in the exhibit, a customer's environment is configured as follows:

What should be the host queue depth setting per path?

Answer : C

The queue depth is per LUN, not per initiator. Here there are 64 LUNs, each visible through 4 paths, which would indicate that 256 is a good choice for the queue depth setting.

Note: As general advice, for optimal operation with XtremIO storage, consider the following: Set the queue depth to 256.

References: https://www.emc.com/collateral/white-paper/h14583-wp-best-practice-sql-server-xtremio.pdf