Google Cloud Associate Data Practitioner Exam Practice Test

Your company has several retail locations. Your company tracks the total number of sales made at each location each day. You want to use SQL to calculate the weekly moving average of sales by location to identify trends for each store. Which query should you use?

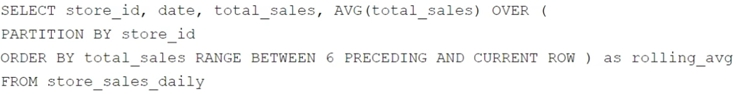

A)

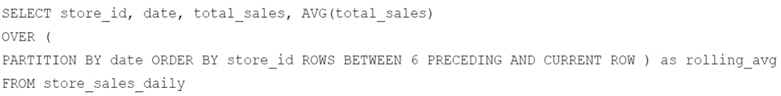

B)

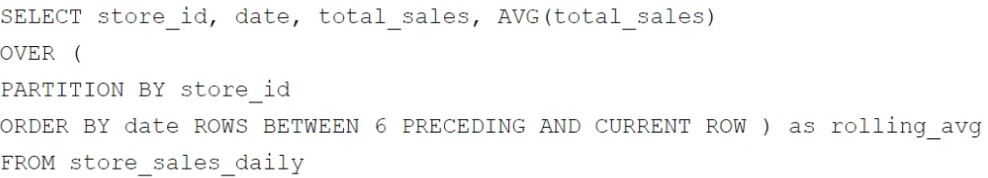

C)

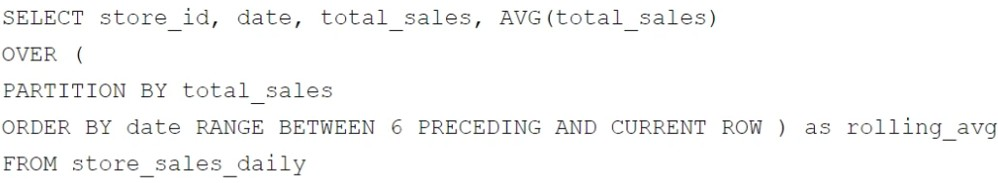

D)

Answer : C

To calculate the weekly moving average of sales by location, the query needs a window function with four parts:

PARTITION BY store_id restarts the calculation for each store. (A plain GROUP BY would collapse the daily rows instead of producing a rolling value per day.)

ORDER BY date ensures the sales are evaluated chronologically within each store.

ROWS BETWEEN 6 PRECEDING AND CURRENT ROW defines a rolling window of 7 rows, which is one week when each row holds one day of data.

AVG(total_sales) computes the average sales over that window.

The chosen query (C) meets all of these requirements.
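The option images are not reproduced here, so the following is only a minimal sketch of a query with the properties listed above, not the exam's exact answer text. It runs the window function against an in-memory SQLite database as a stand-in for BigQuery; the table name `daily_sales` and the sample rows are assumptions made for illustration.

```python
import sqlite3

# In-memory SQLite stands in for BigQuery here (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE daily_sales (store_id TEXT, date TEXT, total_sales REAL)"
)

# Hypothetical sample data: store A grows by 10 per day, store B is flat.
rows = [("A", f"2024-01-{d:02d}", float(d * 10)) for d in range(1, 9)]
rows += [("B", f"2024-01-{d:02d}", 100.0) for d in range(1, 9)]
conn.executemany("INSERT INTO daily_sales VALUES (?, ?, ?)", rows)

MOVING_AVG_SQL = """
SELECT
  store_id,
  date,
  AVG(total_sales) OVER (
    PARTITION BY store_id                      -- one window per store
    ORDER BY date                              -- chronological order
    ROWS BETWEEN 6 PRECEDING AND CURRENT ROW   -- current day + 6 prior days
  ) AS weekly_moving_avg
FROM daily_sales
ORDER BY store_id, date
"""

result = conn.execute(MOVING_AVG_SQL).fetchall()
for row in result:
    print(row)
```

For the first six days of each store the window holds fewer than 7 rows, so the average covers only the days available so far.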

Your company is setting up an enterprise business intelligence platform. You need to limit data access between many different teams while following the Google-recommended approach. What should you do first?

Answer : D


For an enterprise BI platform with data access control across teams, Google recommends Looker (Google Cloud core) over Looker Studio for its robust access management. The 'first' step focuses on setting up the foundation.

Option A: Looker Studio reports are lightweight but lack granular access control beyond sharing. Creating separate reports per team is inefficient and unscalable.

Option B: One Looker Studio report with multiple pages and data sources doesn't enforce team-level access control natively; users could access all pages and data.

Option C: Creating a Looker instance with separate dashboards per team is a step forward but skips the foundational access control setup (groups), reducing scalability.

Option D: Setting up a Looker instance and configuring groups aligns with Google's recommendation for enterprise BI. Groups allow role-based access control (RBAC) at the model, Explore, or dashboard level, ensuring teams see only their data. This is the scalable, foundational step per Looker's 'Access Control' documentation. Reference: Looker Documentation - 'Managing Users and Groups' (https://cloud.google.com/looker/docs/admin-users-groups).


You are working with a large dataset of customer reviews stored in Cloud Storage. The dataset contains several inconsistencies, such as missing values, incorrect data types, and duplicate entries. You need to clean the data to ensure that it is accurate and consistent before using it for analysis. What should you do?

Answer : B

Using BigQuery to batch load the data and perform cleaning and analysis with SQL is the best approach for this scenario. BigQuery provides powerful SQL capabilities to handle missing values, enforce correct data types, and remove duplicates efficiently. This method simplifies the pipeline by leveraging BigQuery's built-in processing power for both cleaning and analysis, reducing the need for additional tools or services and minimizing complexity.
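As a rough illustration of the three cleaning steps (missing values, data types, duplicates), the sketch below runs one SQL statement against an in-memory SQLite database standing in for BigQuery. The table `raw_reviews`, its columns, and the sample rows are hypothetical. In BigQuery the cast would more likely be written as `SAFE_CAST(rating AS INT64)`, which returns NULL instead of failing on bad input.

```python
import sqlite3

# In-memory SQLite stands in for BigQuery here (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE raw_reviews (review_id TEXT, rating TEXT, review_text TEXT)"
)
conn.executemany(
    "INSERT INTO raw_reviews VALUES (?, ?, ?)",
    [
        ("r1", "5", "Great"),
        ("r1", "5", "Great"),  # duplicate entry
        ("r2", "4", None),     # missing review text
        ("r3", "3", "Okay"),   # rating stored as text, not a number
    ],
)

CLEAN_SQL = """
WITH ranked AS (
  SELECT
    review_id,
    CAST(rating AS INTEGER) AS rating,            -- fix the data type
    COALESCE(review_text, '') AS review_text,     -- fill missing values
    ROW_NUMBER() OVER (
      PARTITION BY review_id ORDER BY review_id
    ) AS rn                                       -- number rows per review
  FROM raw_reviews
)
SELECT review_id, rating, review_text
FROM ranked
WHERE rn = 1                                      -- keep one row per review
ORDER BY review_id
"""

cleaned = conn.execute(CLEAN_SQL).fetchall()
for row in cleaned:
    print(row)
```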

Your company uses Looker as its primary business intelligence platform. You want to use LookML to visualize the profit margin for each of your company's products in your Looker Explores and dashboards. You need to implement a solution quickly and efficiently. What should you do?

Answer : B

Defining a new measure in LookML to calculate the profit margin using the existing revenue and cost fields is the most efficient and straightforward solution. This approach allows you to dynamically compute the profit margin directly within your Looker Explores and dashboards without needing to pre-calculate or create additional tables. The measure can be defined using LookML syntax, such as:

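    measure: profit_margin {
      type: number
      # total_revenue and total_cost are assumed to be existing sum measures
      # built on the revenue and cost fields; NULLIF avoids division by zero.
      sql: (${total_revenue} - ${total_cost}) / NULLIF(${total_revenue}, 0) ;;
      value_format: "0.0%"
    }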

This method is quick to implement and integrates seamlessly into your existing Looker model, enabling accurate visualization of profit margins across your products.
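The arithmetic behind the measure can be checked by hand. The sketch below is a plain Python illustration of the same formula, (revenue - cost) / revenue, with a guard for zero revenue; the product names and figures are made up for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProductTotals:
    """Aggregated revenue and cost for one product (hypothetical data)."""
    product: str
    revenue: float
    cost: float


def profit_margin(revenue: float, cost: float) -> Optional[float]:
    """Return (revenue - cost) / revenue, or None when revenue is zero."""
    if revenue == 0:
        return None
    return (revenue - cost) / revenue


totals = [
    ProductTotals("widget", revenue=200.0, cost=150.0),
    ProductTotals("gadget", revenue=0.0, cost=10.0),
]
margins = {t.product: profit_margin(t.revenue, t.cost) for t in totals}

for product, margin in margins.items():
    # Mirror the "0.0%" display format; show n/a when the margin is undefined.
    print(product, "n/a" if margin is None else f"{margin:.1%}")
```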

You work for a healthcare company. You have a daily ETL pipeline that extracts patient data from a legacy system, transforms it, and loads it into BigQuery for analysis. The pipeline currently runs manually using a shell script. You want to automate this process and add monitoring to ensure pipeline observability and troubleshooting insights. You want one centralized solution, using open-source tooling, without rewriting the ETL code. What should you do?

Answer : A


Why A is correct:

Cloud Composer is a managed service for Apache Airflow, a popular open-source workflow orchestration tool.

Airflow DAGs can schedule and automate the ETL pipeline.

Airflow can run the existing shell script as a task, so the ETL code does not need to be rewritten.

The Airflow web interface and Cloud Monitoring together provide the monitoring needed for observability and troubleshooting.

Why the other options are incorrect:

B: Dataflow would require rewriting the ETL pipeline with its SDK (Apache Beam).

C: Dataproc is for big data processing jobs, not orchestration.

D: Cloud Run functions are designed for short-lived, stateless workloads, not for orchestrating and monitoring a long-running ETL pipeline.

Cloud Composer: https://cloud.google.com/composer/docs

Apache Airflow: https://airflow.apache.org/
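A DAG that wraps an existing shell script might look like the sketch below. It assumes Airflow 2.4 or later (older versions use `schedule_interval` instead of `schedule`) and only runs inside an Airflow environment such as Cloud Composer. The DAG ID, task ID, and script path are hypothetical.

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator

with DAG(
    dag_id="daily_patient_etl",       # hypothetical DAG name
    start_date=datetime(2024, 1, 1),
    schedule="@daily",                # run once per day
    catchup=False,                    # do not backfill past dates
) as dag:
    run_etl = BashOperator(
        task_id="run_etl_script",
        # Hypothetical path to the existing script. The trailing space keeps
        # Airflow from treating a value ending in ".sh" as a template file.
        bash_command="bash /home/airflow/gcs/data/run_etl.sh ",
        retries=2,                    # retry a failed run before alerting
    )
```

Each run then appears in the Airflow web interface with its status and task logs, which is where most troubleshooting would start.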

You have a BigQuery dataset containing sales data. This data is actively queried for the first 6 months. After that, the data is not queried but needs to be retained for 3 years for compliance reasons. You need to implement a data management strategy that meets access and compliance requirements, while keeping cost and administrative overhead to a minimum. What should you do?

Answer : B

Partitioning the BigQuery table by month allows efficient querying of recent data for the first 6 months, reducing query costs. After 6 months, exporting the data to Coldline storage minimizes storage costs for data that is rarely accessed but needs to be retained for compliance. Implementing a lifecycle policy in Cloud Storage automates the deletion of the data after 3 years, ensuring compliance while reducing administrative overhead. This approach balances cost efficiency and compliance requirements effectively.
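The deletion rule can be expressed as a Cloud Storage lifecycle configuration. The sketch below builds that JSON in Python. It assumes the 3-year clock starts when the object is created in the bucket (the `age` condition counts days since object creation) and ignores leap days; if retention is counted from the original sale date, the age would need to be shorter.

```python
import json

RETENTION_YEARS = 3
retention_days = RETENTION_YEARS * 365  # 1095 days; leap days ignored

# Lifecycle configuration with a single rule: delete objects once they
# reach the retention age.
lifecycle_config = {
    "lifecycle": {
        "rule": [
            {
                "action": {"type": "Delete"},
                "condition": {"age": retention_days},
            }
        ]
    }
}

lifecycle_json = json.dumps(lifecycle_config, indent=2)
print(lifecycle_json)
```

Saved to a file, a configuration like this can be applied to the bucket, for example with `gcloud storage buckets update` and its `--lifecycle-file` flag.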

You want to process and load a daily sales CSV file stored in Cloud Storage into BigQuery for downstream reporting. You need to quickly build a scalable data pipeline that transforms the data while providing insights into data quality issues. What should you do?

Answer : A

Using Cloud Data Fusion to create a batch pipeline with a Cloud Storage source and a BigQuery sink is the best solution because:

Scalability: Cloud Data Fusion is a scalable, fully managed data integration service.

Data transformation: It provides a visual interface to design pipelines, enabling quick transformation of data.

Data quality insights: Cloud Data Fusion includes built-in tools for monitoring and addressing data quality issues during the pipeline creation and execution process.