Linux Foundation Certified Kubernetes Administrator CKA Exam Practice Test

SIMULATION

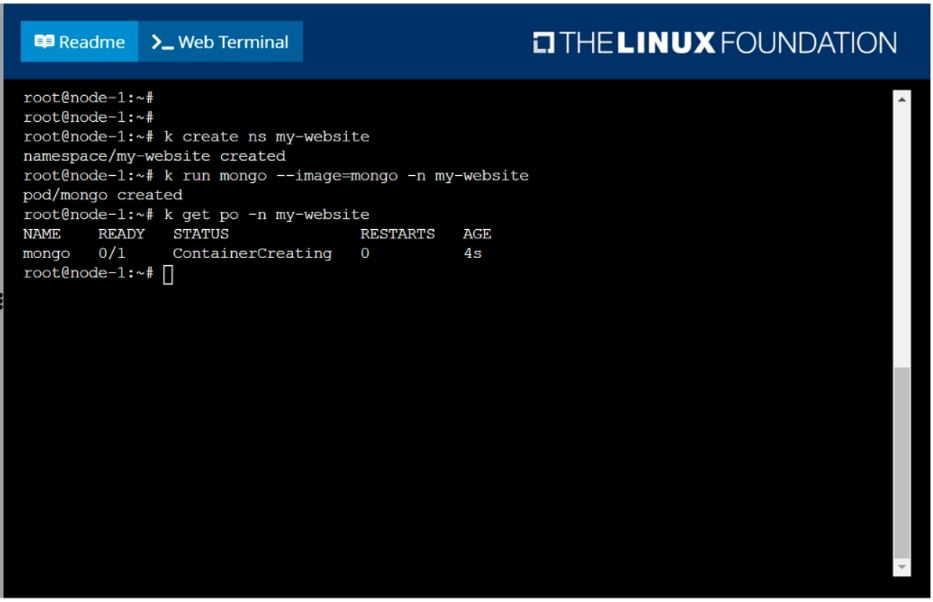

Create a pod as follows:

Name: mongo

Using Image: mongo

In a new Kubernetes namespace named: my-website

Answer : A

solution
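The solution for this task survives only as a screenshot. The script below is a minimal sketch that assumes you have kubectl access to the exam cluster. It writes a manifest containing both the my-website Namespace and the mongo Pod. The file name mongo-pod.yaml is an arbitrary, hypothetical choice.

```shell
#!/bin/sh
# Write one manifest holding the namespace and the pod from the task.
# The file name mongo-pod.yaml is arbitrary (hypothetical).
cat <<'EOF' > mongo-pod.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-website
---
apiVersion: v1
kind: Pod
metadata:
  name: mongo
  namespace: my-website
spec:
  containers:
  - name: mongo
    image: mongo
EOF

echo "wrote mongo-pod.yaml"
```

Apply it with kubectl apply -f mongo-pod.yaml. The imperative equivalent is kubectl create namespace my-website, followed by kubectl run mongo --image=mongo -n my-website.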

SIMULATION

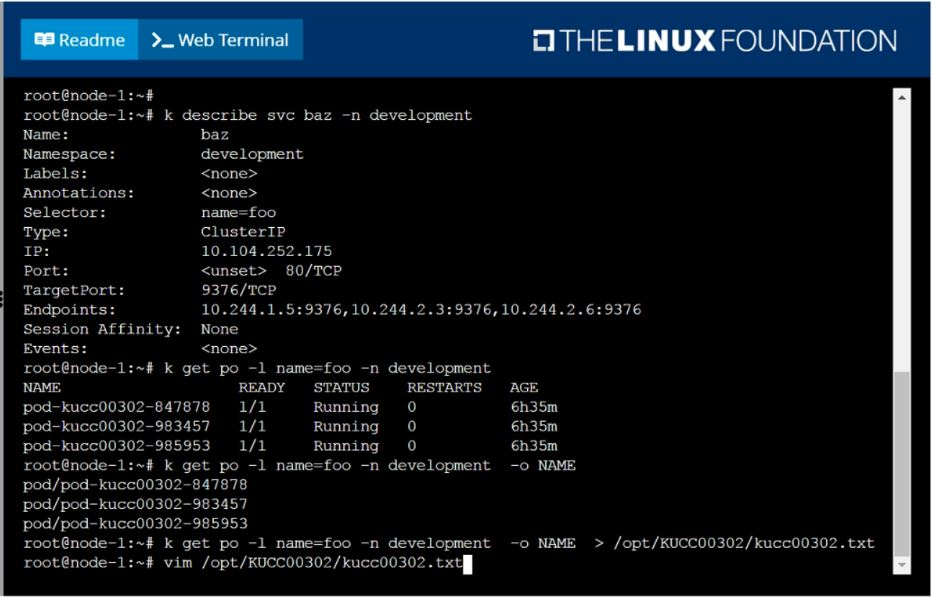

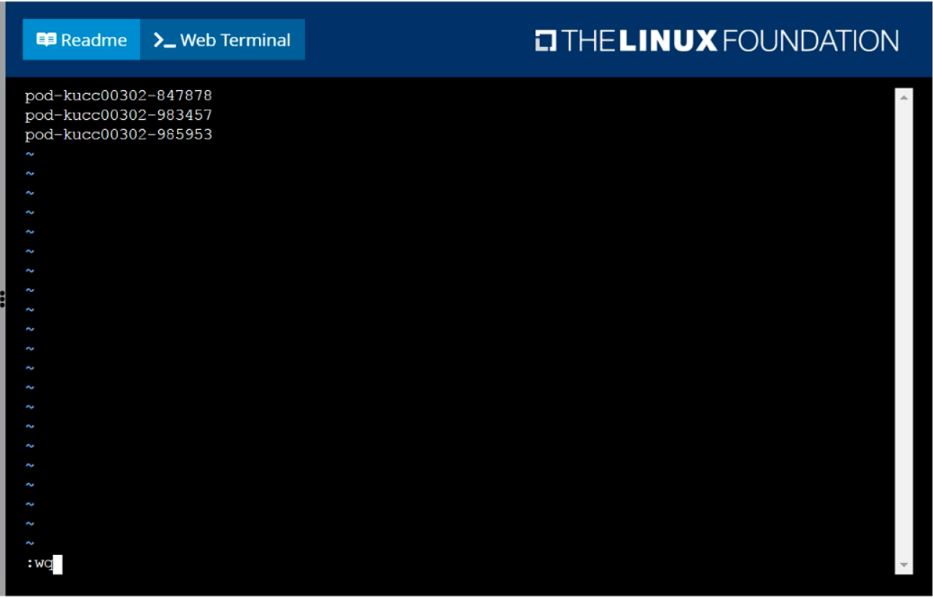

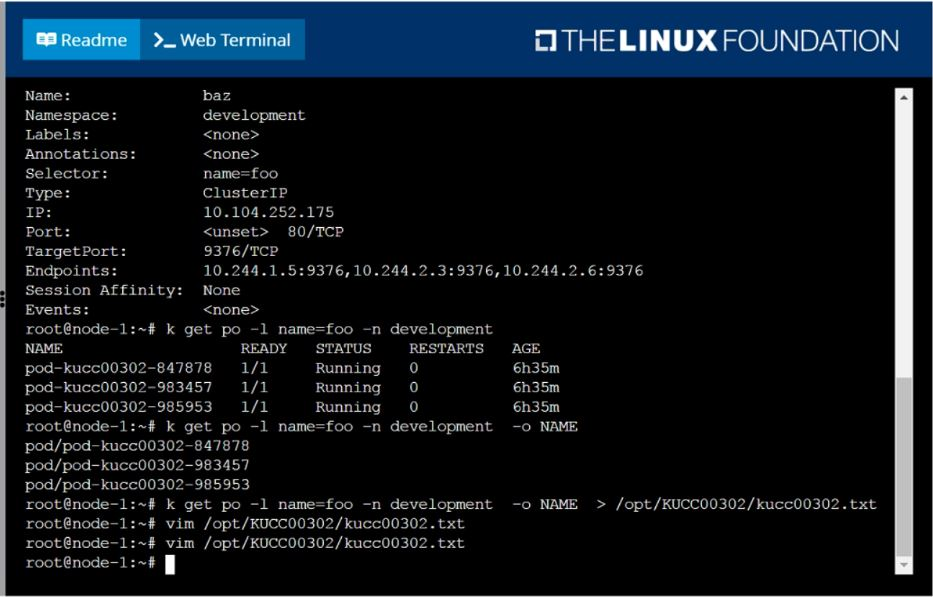

Create a file:

/opt/KUCC00302/kucc00302.txt that lists all pods that implement service baz in namespace development.

The format of the file should be one pod name per line.

Answer : A

solution
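This solution is also only available as a screenshot. The usual approach has three steps. First, read the selector of service baz; kubectl describe service baz -n development shows it on the Selector: line. Second, list the pods that match that selector. Third, keep only the NAME column.

The sketch below demonstrates just the last step. It runs against a hypothetical sample of kubectl get pods --no-headers output, and the pod names in that sample are invented. The script writes to a local file instead of /opt so that it can run anywhere.

```shell
#!/bin/sh
# Hypothetical sample of:
#   kubectl get pods -n development -l <selector-of-baz> --no-headers
# The pod names are invented for illustration.
sample_output='baz-7d9f8c6b5-abcde   1/1   Running   0   2d
baz-7d9f8c6b5-fghij   1/1   Running   0   2d
baz-7d9f8c6b5-klmno   1/1   Running   0   2d'

# Keep only the first column (the pod name): one pod name per line.
printf '%s\n' "$sample_output" | awk '{print $1}' > kucc00302.txt

cat kucc00302.txt
```

On the cluster, pipe the live kubectl get pods ... --no-headers output through the same awk program and redirect the result to /opt/KUCC00302/kucc00302.txt. An alternative that avoids awk is -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}'. Do not use -o name here, because it prefixes every entry with pod/.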

SIMULATION

Monitor the logs of pod foo and:

Extract log lines corresponding to error unable-to-access-website

Write them to /opt/KULM00201/foo

Answer : A

solution

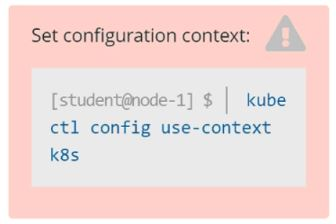

Step 0: Set the correct Kubernetes context

If you're given a specific context (k8s in this case), you must switch to it:

kubectl config use-context k8s

Skipping this can cause you to work in the wrong cluster/namespace and cost you marks.

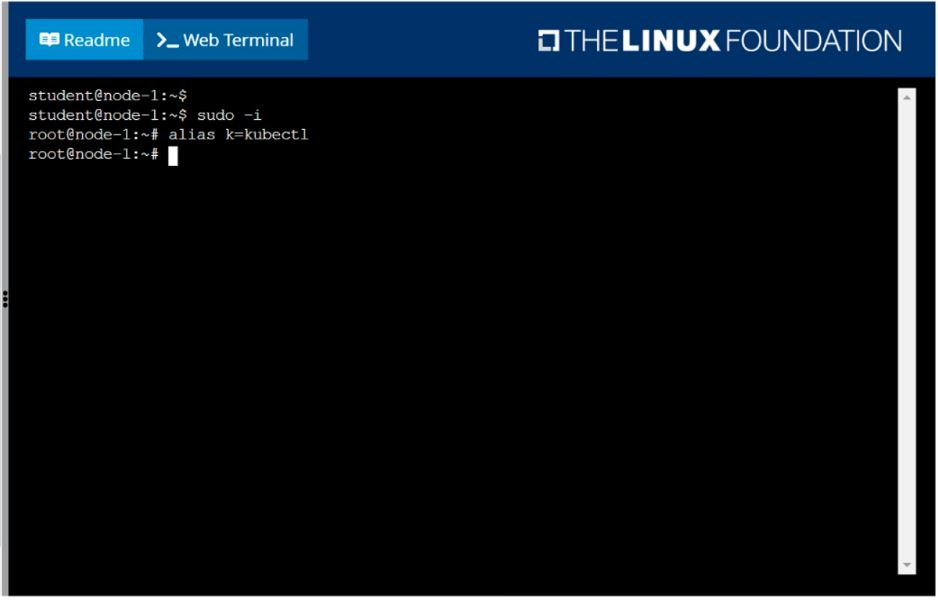

Step 1: Identify the namespace of the pod foo

First, check if foo is running in a specific namespace or in the default namespace.

kubectl get pods --all-namespaces | grep foo

Assume the pod is in the default namespace if no namespace is mentioned.
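Note that grep foo also matches pods with names such as foo-backup or myfoo. The sketch below matches the name exactly with awk. It assumes the default kubectl get pods --all-namespaces column layout, where NAMESPACE is the first column and NAME is the second. The sample rows are hypothetical.

```shell
#!/bin/sh
# Hypothetical sample of `kubectl get pods --all-namespaces --no-headers`.
sample='default       foo           1/1   Running   0   1h
default       foo-backup    1/1   Running   0   1h
kube-system   coredns-abc   1/1   Running   0   3d
web           myfoo         1/1   Running   0   2h'

# Column 2 is the pod name; print the namespace (column 1) of the exact match.
ns=$(printf '%s\n' "$sample" | awk '$2 == "foo" {print $1}')

echo "pod foo is in namespace: $ns"
```

On a live cluster, run kubectl get pods --all-namespaces --no-headers | awk '$2 == "foo" {print $1}'. Alternatively, kubectl get pods -A --field-selector metadata.name=foo returns only pods named exactly foo.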

Step 2: Confirm pod foo exists and is running

kubectl get pod foo

You should get output similar to:

NAME   READY   STATUS    RESTARTS   AGE
foo    1/1     Running   0          1h

If the pod is not running, logs may not be available.

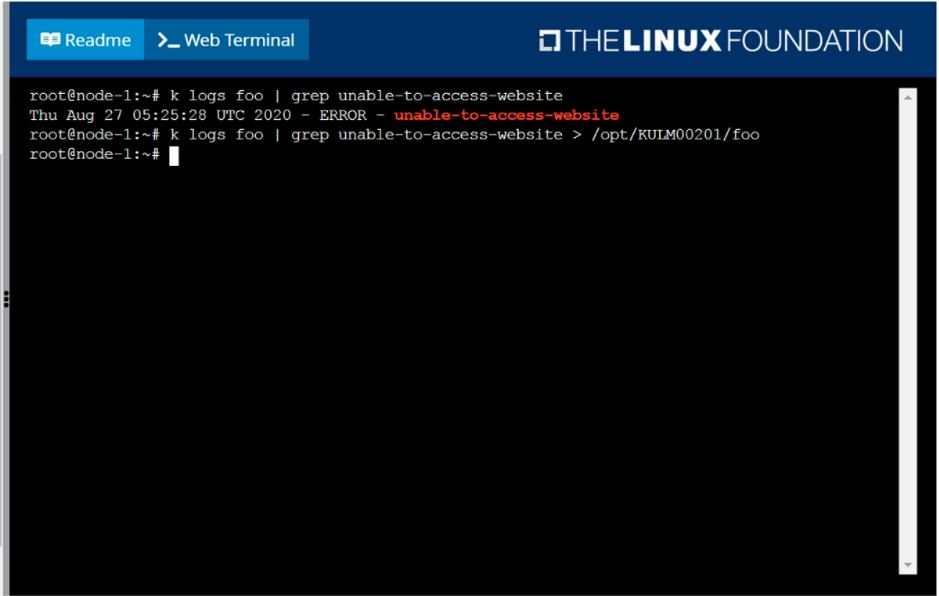

Step 3: View logs and filter specific error lines

We're looking for log lines that contain:

unable-to-access-website

Command:

kubectl logs foo | grep 'unable-to-access-website'

Step 4: Write the filtered log lines to a file

Redirect the output to the required path:

kubectl logs foo | grep 'unable-to-access-website' > /opt/KULM00201/foo

This creates or overwrites the file /opt/KULM00201/foo with the filtered logs.

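You may need elevated permissions if /opt/KULM00201 is not writable by your user. Putting sudo in front of kubectl does not help, because your own shell performs the > redirection. Instead, pipe the output into sudo tee /opt/KULM00201/foo. In most exam environments, however, you already have the required permissions.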
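To check the filter before writing to /opt, the sketch below runs the same grep against a few hypothetical log lines that stand in for kubectl logs foo output. It writes the result to a local file, foo-errors.txt, which is an arbitrary name.

```shell
#!/bin/sh
# Hypothetical log lines standing in for `kubectl logs foo` output.
sample_logs='2024-01-01T10:00:00Z INFO request served
2024-01-01T10:00:01Z ERROR unable-to-access-website
2024-01-01T10:00:02Z INFO request served
2024-01-01T10:00:03Z ERROR unable-to-access-website (retry 1)'

# grep keeps only the matching lines; '>' creates or truncates the file.
printf '%s\n' "$sample_logs" | grep 'unable-to-access-website' > foo-errors.txt

cat foo-errors.txt
```

The shell creates the target file before grep runs, so the file exists even when nothing matches. In that case grep exits with status 1 and the file is empty, which usually means the pattern or the pod name is wrong.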

Step 5: Verify the output file (optional but smart)

Check that the file was created and has the correct content:

cat /opt/KULM00201/foo

Final Answer Summary:

kubectl config use-context k8s

kubectl logs foo | grep 'unable-to-access-website' > /opt/KULM00201/foo

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh Cka000058

Context

You manage a WordPress application. Some Pods are not starting because resource requests are too high.

Task

A WordPress application in the relative-fawn namespace consists of:

- A WordPress Deployment with 3 replicas.

Adjust all Pod resource requests as follows:

- Divide node resources evenly across all 3 Pods.
- Give each Pod a fair share of CPU and memory.

Answer : A

Task Summary

You are managing a WordPress Deployment in namespace relative-fawn.

Deployment has 3 replicas.

Pods are not starting due to high resource requests.

Your job: Adjust CPU and memory requests so that all 3 pods evenly split the node's capacity.

Step-by-Step Solution

1 SSH into the correct host

ssh cka000058

Skipping this may result in a zero score.

2 Check node resource capacity

You need to know the node's CPU and memory resources.

kubectl describe node | grep -A5 'Capacity'

Example output:

Capacity:
  cpu:     3
  memory:  3Gi

Let's assume the node has:

3 CPUs

3Gi memory

So for 3 pods, divide evenly:

CPU request per pod: 1

Memory request per pod: 1Gi

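In the actual exam, check the real values and divide accordingly. Base the split on the Allocatable section of kubectl describe node rather than on Capacity, and leave some headroom. Requests from pods already running on the node (for example, in kube-system) count against the same allocatable pool. Requesting exactly one third of the node can therefore leave the third Pod Pending. If the node had 4 CPUs and 8Gi, an even split would be about 1333m CPU and 2730Mi memory per Pod, before subtracting that headroom.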
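The per-Pod arithmetic can be scripted. This minimal sketch assumes you have already read the node's allocatable CPU and memory by hand and converted them to millicores and MiB. The allocatable figures and the headroom amounts below are hypothetical placeholders, not exam values.

```shell
#!/bin/sh
# Hypothetical allocatable values; replace with the real numbers from
# `kubectl describe node` (CPU in millicores, memory in MiB).
alloc_cpu_m=3000
alloc_mem_mi=3072
# Hypothetical headroom kept back for pods already running on the node.
reserve_cpu_m=300
reserve_mem_mi=300
replicas=3

# Integer division rounds down, which keeps the total within the budget.
cpu_per_pod=$(( (alloc_cpu_m - reserve_cpu_m) / replicas ))
mem_per_pod=$(( (alloc_mem_mi - reserve_mem_mi) / replicas ))

echo "cpu: ${cpu_per_pod}m"
echo "memory: ${mem_per_pod}Mi"
```

With these placeholder numbers, each Pod gets 900m CPU and 924Mi memory. You can apply the result without opening an editor, for example with kubectl set resources deployment wordpress -n relative-fawn --requests=cpu=900m,memory=924Mi. This command changes the pod template and therefore triggers a rollout.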

3 Edit the Deployment

Edit the WordPress deployment in the relative-fawn namespace:

kubectl edit deployment wordpress -n relative-fawn

Look for the resources section under spec.template.spec.containers like this:

resources:
  requests:
    cpu: '1'
    memory: '1Gi'

If the section doesn't exist, add it manually.

Save and exit the editor (:wq if using vi).

4 Confirm changes

Wait a few seconds, then check:

kubectl get pods -n relative-fawn

Ensure all 3 pods are in Running state.

You can also describe the pods to confirm the resource requests are set:

kubectl describe pods -n relative-fawn | grep -A2 Requests

Final Command Summary

ssh cka000058

kubectl describe node | grep -A5 'Capacity'

kubectl edit deployment wordpress -n relative-fawn

# Set CPU: 1, Memory: 1Gi (or according to node capacity)

kubectl get pods -n relative-fawn

SIMULATION

List the logs of the pod named "frontend", search for the pattern "started", and write the matching lines to the file "/opt/error-logs".

Answer : A

kubectl logs frontend | grep -i 'started' > /opt/error-logs
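The -i flag makes the match case-insensitive, so grep also keeps lines that contain Started or STARTED. The quick check below runs against hypothetical log lines and writes to a local file instead of /opt/error-logs.

```shell
#!/bin/sh
# Hypothetical stand-in for `kubectl logs frontend` output.
sample_logs='Server Started on port 8080
worker started
health check ok
STARTED background job'

# -i: case-insensitive match, so all three "started" variants are kept.
printf '%s\n' "$sample_logs" | grep -i 'started' > error-logs.txt

cat error-logs.txt
```

Drop -i if the task expects an exact, case-sensitive match.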

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh Cka000049

Task

Perform the following tasks:

Create a new PriorityClass named high-priority for user workloads with a value that is one less than the highest existing user-defined priority class value.

Patch the existing Deployment busybox-logger running in the priority namespace to use the high-priority priority class.

Answer : A

Task Summary

SSH into the correct node: cka000049

Find the highest existing user-defined PriorityClass

Create a new PriorityClass high-priority with a value one less

Patch Deployment busybox-logger (in namespace priority) to use this new PriorityClass

Step-by-Step Solution

1 SSH into the correct node

ssh cka000049

Skipping this may result in a zero score.

2 Find the highest existing user-defined PriorityClass

Run:

kubectl get priorityclasses.scheduling.k8s.io

Example output:

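NAME                      VALUE        GLOBAL-DEFAULT   AGE
default-low               1000         false            10d
mid-tier                  2000         false            7d
system-cluster-critical   2000000000   false            30d
system-node-critical      2000001000   false            30d

Exclude the built-in system-cluster-critical and system-node-critical classes. Kubernetes creates these itself, so they are not user-defined.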

Let's assume the highest user-defined value is 2000.

So your new class should be:

Value = 1999
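You can also script the choice of value. This sketch assumes that kubectl get priorityclass --no-headers prints NAME and VALUE as its first two columns and that built-in classes are exactly those whose names start with system-. The sample rows are hypothetical.

```shell
#!/bin/sh
# Hypothetical `kubectl get priorityclass --no-headers` output
# (NAME VALUE GLOBAL-DEFAULT AGE); class names are illustrative.
sample='default-low               1000         false   10d
mid-tier                  2000         false   7d
system-cluster-critical   2000000000   false   30d
system-node-critical      2000001000   false   30d'

# Skip the built-in system-* classes, track the largest remaining VALUE,
# then print one less than it.
new_value=$(printf '%s\n' "$sample" | awk '
  $1 !~ /^system-/ { if (max == "" || $2 + 0 > max) max = $2 + 0 }
  END { print max - 1 }')

echo "high-priority value: $new_value"
```

On the cluster, pipe the live kubectl get priorityclass --no-headers output into the same awk program.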

3 Create the high-priority PriorityClass

Create a file called high-priority.yaml:

cat <<EOF > high-priority.yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 1999
globalDefault: false
description: 'High priority class for user workloads'
EOF

Apply it:

kubectl apply -f high-priority.yaml

4 Patch the busybox-logger deployment

Now patch the existing Deployment in the priority namespace:

kubectl patch deployment busybox-logger -n priority \
  --type='merge' \
  -p '{"spec": {"template": {"spec": {"priorityClassName": "high-priority"}}}}'
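Quoting JSON inside a shell command is easy to get wrong. One alternative keeps the patch in a file and passes it with --patch-file, which assumes a kubectl version that supports that flag. kubectl patch accepts the patch in YAML as well as JSON. The file name priority-patch.yaml is arbitrary.

```shell
#!/bin/sh
# Write the patch as YAML so no JSON quoting is needed on the command line.
cat <<'EOF' > priority-patch.yaml
spec:
  template:
    spec:
      priorityClassName: high-priority
EOF

cat priority-patch.yaml
```

Apply it with kubectl patch deployment busybox-logger -n priority --type merge --patch-file priority-patch.yaml.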

5 Verify your work

Confirm the patch was applied:

kubectl get deployment busybox-logger -n priority -o jsonpath='{.spec.template.spec.priorityClassName}'

You should see:

high-priority

Also, confirm the class exists:

kubectl get priorityclass high-priority

Final Command Summary

ssh cka000049

kubectl get priorityclass

# Create the new PriorityClass

cat <<EOF > high-priority.yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 1999
globalDefault: false
description: 'High priority class for user workloads'
EOF

kubectl apply -f high-priority.yaml

# Patch the deployment

kubectl patch deployment busybox-logger -n priority \
  --type='merge' \
  -p '{"spec": {"template": {"spec": {"priorityClassName": "high-priority"}}}}'

# Verify

kubectl get deployment busybox-logger -n priority -o jsonpath='{.spec.template.spec.priorityClassName}'

kubectl get priorityclass high-priority

SIMULATION

Create a pod with the environment variable var1=value1, then check the environment variable in the pod.

Answer : A

kubectl run nginx --image=nginx --restart=Never --env=var1=value1

# then

kubectl exec -it nginx -- env

# or

kubectl exec -it nginx -- sh -c 'echo $var1'

# or

kubectl describe po nginx | grep value1
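You can also write the same pod declaratively. This sketch reuses the nginx pod name and image from the commands above. The manifest file name env-pod.yaml is arbitrary, and the result is only roughly equivalent to kubectl run, which also adds a run=nginx label.

```shell
#!/bin/sh
# Pod with a single environment variable var1=value1, a declarative form of
# `kubectl run nginx --image=nginx --restart=Never --env=var1=value1`.
cat <<'EOF' > env-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  restartPolicy: Never
  containers:
  - name: nginx
    image: nginx
    env:
    - name: var1
      value: "value1"
EOF

cat env-pod.yaml
```

Apply it with kubectl apply -f env-pod.yaml, then verify with kubectl exec nginx -- env.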