Qlik Sense Data Architect Certification Exam - 2024 QSDA2024 Exam Practice Test

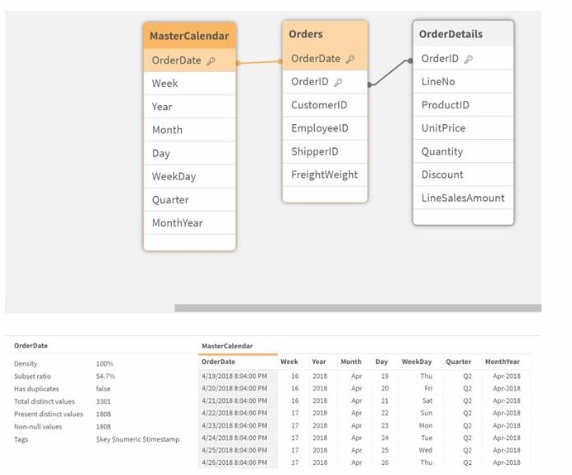

Exhibit.

Refer to the exhibit.

A business analyst informs the data architect that not all analysis types over time show the expected data.

Instead, they show very little data, if any.

Which Qlik script function should be used to resolve the issue in the data model?

Answer : A

In the provided data model, certain types of analysis over time do not show the expected data. This is typically caused by a mismatch between the OrderDate values in the Orders table and the dates in the MasterCalendar table. OrderDate holds full timestamps (a date plus a time of day), while the MasterCalendar holds whole dates. Only orders stamped exactly at midnight find a matching calendar date, so most rows drop out of time-based analysis.

Option A: Date(Floor(OrderDate)) rounds the underlying number down to the whole day, which removes the time portion, and then formats the result as a date. The resulting values match the MasterCalendar dates, which resolves the issue.

Option B: TimeStamp#(OrderDate, 'M/D/YYYY hh.mm.ff') only tells Qlik how to interpret a text value as a timestamp. The time portion is kept, so the mismatch remains.

Option C: TimeStamp(OrderDate) keeps both the date and the time, so the values still do not match a MasterCalendar that contains pure dates.

Option D: Date(OrderDate) changes only how the value is displayed. The underlying number still contains the fractional time part, so the field looks like a date but still does not associate with the MasterCalendar.

In Qlik Sense, dates and timestamps are stored as dual values: a text representation plus a number of days, in which the time of day is the fractional part. Formatting functions such as Date() and TimeStamp() change the text but not the number, so the time must be removed with Floor() before formatting, as in Date(Floor(OrderDate)).
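The effect can be modeled outside Qlik. The Python sketch below assumes Qlik's serial-number representation of dates (days counted from 30 December 1899, with the time of day as the fractional part) and uses a hypothetical MasterCalendar and hypothetical order timestamps. It checks which OrderDate values find an exact numeric match in the calendar, first as stored and then after rounding down to the whole day.

```python
import math
from datetime import datetime

# Assumption: like Qlik, represent a date/timestamp as a serial number of days
# counted from 1899-12-30, with the time of day as the fractional part.
EPOCH = datetime(1899, 12, 30)


def to_serial(ts: datetime) -> float:
    """Return the serial-number representation of a timestamp."""
    delta = ts - EPOCH
    return delta.days + delta.seconds / 86400


# Hypothetical MasterCalendar: one whole-number serial per day in January 2024.
calendar = {to_serial(datetime(2024, 1, day)) for day in range(1, 32)}

# Hypothetical OrderDate values, most of them carrying a time of day.
orders = [
    datetime(2024, 1, 5, 0, 0),    # exactly midnight
    datetime(2024, 1, 5, 14, 30),
    datetime(2024, 1, 20, 9, 15),
]

# Match on the stored value versus on the value rounded down to the whole day.
raw_matches = [o for o in orders if to_serial(o) in calendar]
floored_matches = [o for o in orders if math.floor(to_serial(o)) in calendar]

print(len(raw_matches), "of", len(orders), "match as stored")        # 1 of 3 match as stored
print(len(floored_matches), "of", len(orders), "match after floor")  # 3 of 3 match after floor
```

Only the order stamped exactly at midnight matches as stored; after flooring, all three do.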

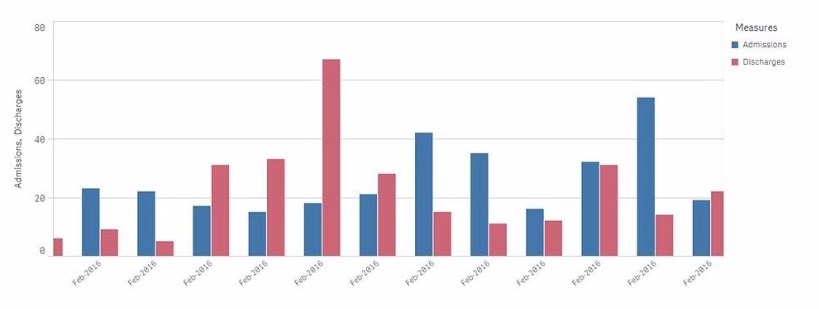

Exhibit.

A chart for monthly hospital admissions and discharges incorrectly displays the month and year values on the x-axis.

The date format for the source data field "Common Date" is M/D/YYYY. This format was used in a calculated field named "Month-Year" in the data manager when the data model was first built.

Which expression should the data architect use to fix this issue?

Answer : A

The issue described relates to the incorrect display of month and year values on the x-axis of a chart. The source data has dates in the M/D/YYYY format, and a calculated field named Month-Year was created using this date format.

To correct the issue:

The correct approach is to use the MonthStart() function, which returns the first date of the month containing the given date. Every date in the same month therefore maps to the same value, giving one consistent month-year entry per month.

The Date() function is then used to format the result of MonthStart() to the desired format of MMM-YYYY (e.g., Feb-2018).

Explanation of the Correct Expression:

MonthStart([Common Date]): This ensures that all dates within a month are treated as the first day of that month, which is critical for accurate monthly aggregation.

Date(..., 'MMM-YYYY'): This formats the result to show only the month and year (for example, Feb-2018). The underlying numeric value is kept, so the months still sort in calendar order.

Using this expression ensures that the x-axis correctly displays the month-year values.
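A small Python model of the expression, assuming [Common Date] arrives as M/D/YYYY text. Here month_start stands in for MonthStart() and month_year_label for formatting with 'MMM-YYYY'; both names and the sample dates are hypothetical. It also shows why keeping the underlying value numeric matters: ordered by the month-start date, the labels come out in calendar order, whereas sorting the text labels alone does not.

```python
from datetime import date, datetime


def month_start(common_date: str) -> date:
    """Stand-in for MonthStart(): parse M/D/YYYY text, return the month's first day."""
    parsed = datetime.strptime(common_date, "%m/%d/%Y").date()
    return parsed.replace(day=1)


def month_year_label(d: date) -> str:
    """Stand-in for the 'MMM-YYYY' format code, e.g. Feb-2018.
    %b follows the current locale; English names assume the default C locale."""
    return d.strftime("%b-%Y")


# Hypothetical [Common Date] values in M/D/YYYY format.
common_dates = ["2/5/2018", "2/27/2018", "12/1/2017", "1/15/2018"]

# Group by month start, then order by the underlying date (numeric order).
months = sorted({month_start(s) for s in common_dates})
labels = [month_year_label(m) for m in months]

print(labels)          # ['Dec-2017', 'Jan-2018', 'Feb-2018']
print(sorted(labels))  # ['Dec-2017', 'Feb-2018', 'Jan-2018'] (text order, not calendar order)
```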

A data architect needs to load large amounts of data from a database that is continuously updated.

* New records are added, and existing records get updated and deleted.

* Each record has a LastModified field.

* All existing records are exported into a QVD file.

* The data architect wants to load the records into Qlik Sense efficiently.

Which steps should the data architect take to meet these requirements?

Answer : D

When dealing with a database that is continuously updated with new records, updates, and deletions, an efficient data load strategy is necessary to minimize the load time and keep the Qlik Sense data model up-to-date.

Explanation of Steps:

Load the existing data from the QVD:

This step retrieves the data that was already extracted and stored in the QVD during the previous reload. It acts as the base to which new or updated records are added.

Load new and updated data from the database. Concatenate with the table loaded from the QVD:

The next step is to load only the new and updated records from the database, that is, the records whose LastModified value is later than the time of the previous reload. This minimizes the amount of data being loaded and focuses on just the changes.

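The new and updated records are then concatenated with the existing data from the QVD. For updated records, the older version from the QVD must be discarded so that each record appears only once; this is typically done with a WHERE NOT EXISTS condition on the primary key.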

Create a separate table for the deleted rows and use a WHERE NOT EXISTS to remove these records:

A separate table is created to handle deletions. The WHERE NOT EXISTS clause is used to identify and remove records from the combined dataset that have been deleted in the source database.
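The three steps can be modeled in Python to show what each one contributes. This is a sketch under stated assumptions, not Qlik script: each table is a dictionary keyed on a hypothetical primary key, the sample rows are hypothetical, and LastModified is compared with the time of the previous reload. In Qlik script, replacing updated rows is commonly done with WHERE NOT EXISTS on the primary key, and deletions are handled by comparing against the keys that currently exist in the database.

```python
from datetime import datetime

# Hypothetical rows: primary key -> (value, LastModified).
qvd_rows = {
    1: ("A", datetime(2024, 1, 1)),
    2: ("B", datetime(2024, 1, 2)),
    3: ("C", datetime(2024, 1, 3)),
}
last_reload = datetime(2024, 1, 10)

# Hypothetical current database state: key 2 updated, key 3 deleted, key 4 added.
database = {
    1: ("A", datetime(2024, 1, 1)),
    2: ("B2", datetime(2024, 1, 12)),
    4: ("D", datetime(2024, 1, 15)),
}


def incremental_load(qvd, db, since):
    # Step 1: start from the existing data stored in the QVD.
    table = dict(qvd)
    # Step 2: take only rows whose LastModified is after the previous reload.
    # Keying on the primary key makes an updated row replace its old version.
    changed = {key: row for key, row in db.items() if row[1] > since}
    table.update(changed)
    # Step 3: keep only keys that still exist in the source, dropping deletions.
    current_keys = set(db)
    return {key: row for key, row in table.items() if key in current_keys}


result = incremental_load(qvd_rows, database, last_reload)
print(sorted(result))  # [1, 2, 4]
print(result[2][0])    # B2
```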

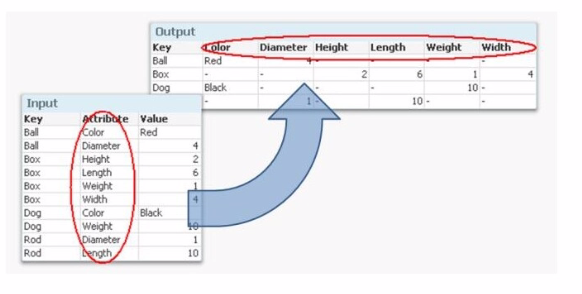

Exhibit.

Refer to the exhibit.

A data architect wants to transform the input data set to the output data set. Which prefix to the Qlik Sense LOAD command should the data architect use?

Answer : C

In this scenario, the data architect wants to transform the input dataset, which is in a key-value pair structure, into a table where each attribute becomes a column with its corresponding value under the relevant key.

Understanding the Requirement:

The input data consists of three fields: Key, Attribute, and Value.

The desired output structure has the Key as a primary identifier, and the Attributes (like Color, Diameter, Height, etc.) are spread across the columns, with corresponding values filled in each row.

Best Method to Achieve this Transformation:

The appropriate method to convert key-value pairs into a structured table where each unique attribute becomes a separate column is the GENERIC prefix to the LOAD statement (a generic load) in Qlik Sense.

Why Generic?

Generic Load is specifically designed for situations where data is stored in a key-value format (like the one provided) and needs to be converted into a more traditional tabular format, with attributes as columns.

It creates a separate table for each distinct value of Attribute, holding the Key and the corresponding Value in a field named after that attribute, which effectively 'pivots' the attribute values into columns.

How it Works:

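When GENERIC LOAD is applied to the input dataset, Qlik Sense generates multiple tables, one for each distinct Attribute. These tables are not merged automatically; they all share the Key field, so they are associated in the data model, and a table chart with Key and the attribute fields shows the desired output structure. If a single physical table is required, the generated tables can be joined in the script afterwards.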
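A rough Python model of what the GENERIC prefix produces, using hypothetical Key values and the attribute names mentioned above. Here generic_load builds one table per distinct Attribute, and associate lines those tables up on Key, which approximates what a table chart over the associated tables displays. It models the behavior only and is not Qlik's implementation.

```python
from collections import defaultdict

# Hypothetical input rows in (Key, Attribute, Value) form.
rows = [
    ("Ball", "Color", "Red"),
    ("Ball", "Diameter", "25"),
    ("Box", "Color", "Blue"),
    ("Box", "Height", "16"),
]


def generic_load(rows):
    """Model GENERIC LOAD: one table per distinct Attribute, mapping Key -> Value."""
    tables = defaultdict(dict)
    for key, attribute, value in rows:
        tables[attribute][key] = value
    return dict(tables)


def associate(tables):
    """Line the per-attribute tables up on Key; missing attributes become None."""
    keys = sorted({k for table in tables.values() for k in table})
    attributes = sorted(tables)
    return [[k] + [tables[a].get(k) for a in attributes] for k in keys]


tables = generic_load(rows)
print(sorted(tables))  # ['Color', 'Diameter', 'Height']
for line in associate(tables):
    print(line)
# ['Ball', 'Red', '25', None]
# ['Box', 'Blue', None, '16']
```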

Qlik Sense Documentation on Generic Load: The documentation outlines how to use the Generic Load to handle key-value pairs and pivot them into a more traditional table format.

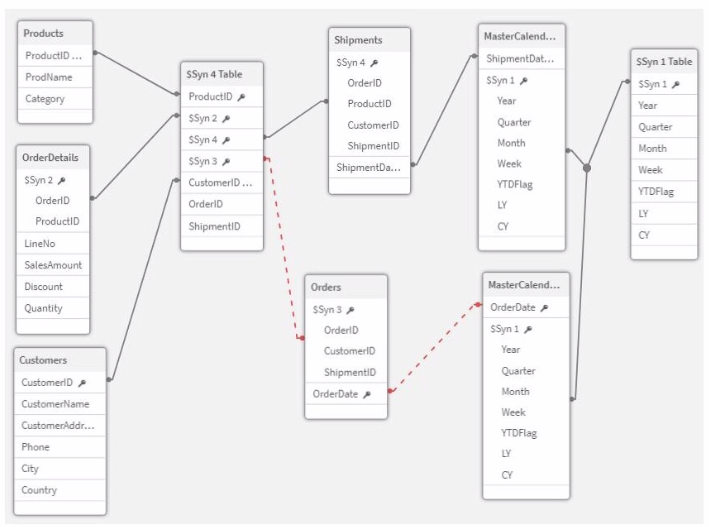

Exhibit.

Refer to the exhibit.

A data architect is working on a Qlik Sense app the business has created to analyze the company orders and shipments.

To understand the table structure, the business has given the following summary:

* Every order creates a unique OrderID and an order date in the Orders table

* An order can contain one or more order lines, one for each product ID, in the OrderDetails table

* Products in the order are shipped (shipment date) as soon as they are ready and can be shipped separately

* The dates need to be analyzed separately by Year, Month, and Quarter

The data architect realizes the data model has issues that must be fixed. Which steps should the data architect perform?

Answer : C

In the given data model, there are several issues related to table relationships and key fields that need to be addressed to create a functional and optimized data model. Here's how each step in the chosen solution (Option C) resolves these issues:

Create a key with OrderID and ProductID in the OrderDetails table and in the Shipments table:

By creating a composite key with OrderID and ProductID, you uniquely identify each line item in both the OrderDetails and Shipments tables. This step is crucial for ensuring that each product within an order is correctly associated with its respective shipment.
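A minimal Python sketch of the composite key, with hypothetical sample rows. In Qlik script the equivalent field would typically be built with the string concatenation operator, for example OrderID & '|' & ProductID AS OrderProductKey (the field name OrderProductKey is hypothetical). The separator keeps combinations such as 1 and 12 distinct from 11 and 2.

```python
# Hypothetical OrderDetails and Shipments rows.
order_details = [
    {"OrderID": 1001, "ProductID": "P1", "Quantity": 2},
    {"OrderID": 1001, "ProductID": "P2", "Quantity": 1},
]
shipments = [
    {"OrderID": 1001, "ProductID": "P2", "ShipmentDate": "2024-03-04"},
    {"OrderID": 1001, "ProductID": "P1", "ShipmentDate": "2024-03-06"},
]


def composite_key(row: dict) -> str:
    """Combine OrderID and ProductID; the separator keeps 1|12 distinct from 11|2."""
    return f"{row['OrderID']}|{row['ProductID']}"


# Look up each order line's shipment through the shared composite key.
shipment_by_key = {composite_key(s): s["ShipmentDate"] for s in shipments}
for line in order_details:
    key = composite_key(line)
    print(key, line["Quantity"], shipment_by_key.get(key))
# 1001|P1 2 2024-03-06
# 1001|P2 1 2024-03-04
```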

Delete the ShipmentID in the Orders table:

The ShipmentID in the Orders table is redundant because the Shipments table already captures this information at a more granular level (i.e., at the product level). Removing ShipmentID avoids potential circular references or synthetic keys.

Delete the ProductID and OrderID in the Shipments table:

After creating the composite key in step 1, the individual ProductID and OrderID fields in the Shipments table are no longer necessary for joins. Removing them reduces redundancy and simplifies the table structure.

Concatenate Orders and OrderDetails:

Concatenating Orders and OrderDetails into a single table creates a unified table that contains all necessary order-related information. This helps in simplifying the model and avoiding issues related to managing separate but related tables.

Create a link table using the MasterCalendar table and create a concatenated field between OrderDate and ShipmentDate:

A link table is created to associate the combined table with the MasterCalendar. It stores the OrderDate and ShipmentDate values in one common date field, typically together with a flag that records which kind of date each row holds. Both dates then link to the same calendar and can still be analyzed separately by Year, Month, and Quarter.
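One common way to implement this step is a link table with a single shared date field (sometimes called a canonical date). The Python sketch below models that assumption: each row holds a composite key, a hypothetical DateType flag (Order or Shipment), and the date, so one MasterCalendar can serve both dates while the flag keeps order and shipment analysis apart. The sample rows are hypothetical.

```python
from collections import Counter

# Hypothetical dates per composite key (OrderID|ProductID).
order_dates = {"1001|P1": "2024-03-28", "1001|P2": "2024-03-28"}
shipment_dates = {"1001|P1": "2024-04-02", "1001|P2": "2024-03-30"}


def build_link_table(order_dates, shipment_dates):
    """One row per key and date type, with a single shared date field
    that a MasterCalendar could associate with."""
    rows = [(key, "Order", d) for key, d in order_dates.items()]
    rows += [(key, "Shipment", d) for key, d in shipment_dates.items()]
    return rows


link_table = build_link_table(order_dates, shipment_dates)

# Count rows per (date type, year-month), as a calendar-based chart might.
counts = Counter((date_type, d[:7]) for _, date_type, d in link_table)
print(counts[("Order", "2024-03")])     # 2
print(counts[("Shipment", "2024-03")])  # 1
print(counts[("Shipment", "2024-04")])  # 1
```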

A data architect needs to load data from two different databases. Additional data will be added from a folder that contains QVDs, text files, and Excel files.

What is the minimum number of data connections required?

Answer : B

In the scenario, the data architect needs to load data from two different databases, and additional data is located in a folder containing QVDs, text files, and Excel files.

Minimum Number of Data Connections Required:

Database Connections:

Each database requires a separate data connection. Therefore, two data connections are needed for the two databases.

Folder Connection:

A single folder data connection can be used to access all the QVDs, text files, and Excel files in the specified folder. Qlik Sense allows you to create a folder connection that can access multiple file types within that folder.

Total Connections:

Two Database Connections: One for each database.

One Folder Connection: To access the QVDs, text files, and Excel files.

Therefore, the minimum number of data connections required is three.

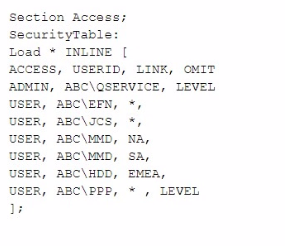

Exhibit.

The Section Access security table for an app is shown. User ABC\PPP opens a Qlik Sense app containing a table that uses the field LEVEL in one of its columns.

Which is the result?

Answer : D

In this scenario, the Section Access security table controls user access to data within the Qlik Sense app. The user in question, ABC\PPP, has a specific entry in the security table that determines their access rights to the LEVEL field.

Understanding Section Access:

Section Access is used to enforce security by restricting access to certain data based on the user's credentials.

In the security table provided, the USER role for ABC\PPP is set to have access to all data (* in the LINK field), but the OMIT field is set to LEVEL. The OMIT field in Section Access specifies fields that should be omitted from the user's view.

Outcome:

Since the OMIT field for user ABC\PPP is set to LEVEL, this user will not have access to the LEVEL field in the Qlik Sense application.

Option D (the table is displayed without the LEVEL column) is the correct outcome.

Qlik Sense Security and Section Access Documentation: The OMIT functionality in Section Access is specifically designed to remove fields from the user's access, ensuring that sensitive or unnecessary data is not exposed.
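A minimal Python model of how an OMIT value hides a field for the matching user. The rows and every field other than LEVEL are hypothetical stand-ins for the exhibit, and the model ignores the data reduction that fields such as LINK perform.

```python
# Hypothetical Section Access rows as (ACCESS, USERID, OMIT).
# Section Access field names and values are conventionally written in upper case.
section_access = [
    ("ADMIN", "ABC\\ADMIN", ""),
    ("USER", "ABC\\PPP", "LEVEL"),
]
# Hypothetical fields used by the table; only LEVEL comes from the question.
table_fields = ["REGION", "LEVEL", "SALES"]


def visible_fields(user, rows, fields):
    """Return the fields left after removing every field OMITted for the user."""
    omitted = {omit for _access, userid, omit in rows if userid == user and omit}
    return [f for f in fields if f not in omitted]


print(visible_fields("ABC\\PPP", section_access, table_fields))    # ['REGION', 'SALES']
print(visible_fields("ABC\\ADMIN", section_access, table_fields))  # ['REGION', 'LEVEL', 'SALES']
```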