Snowflake SnowPro Advanced: Architect Recertification ARA-R01 Exam Practice Test

The following table exists in the production database:

A regulatory requirement states that the company must mask the username for events that are older than six months based on the current date when the data is queried.

How can the requirement be met without duplicating the event data and making sure it is applied when creating views using the table or cloning the table?

Answer : C

The other options are not correct because:

A) Using a masking policy on the username column using an entitlement table with valid dates would require creating another table that stores the valid dates for each username, and joining it with the user_events table in the masking policy function. This would add complexity and overhead to the masking policy, and it would not use the event_timestamp column as the condition for masking.

B) Using a row level policy on the user_events table using an entitlement table with valid dates would require creating another table that stores the valid dates for each username, and joining it with the user_events table in the row access policy function. This would filter out the rows that have event_timestamp older than six months based on the valid dates, instead of masking the username column. This would not meet the requirement of masking the username, and it would also reduce the visibility of the event data.

1:Masking Policies | Snowflake Documentation

2: Using Conditional Columns in Masking Policies | Snowflake Documentation

A Snowflake Architect is setting up database replication to support a disaster recovery plan. The primary database has external tables.

How should the database be replicated?

Answer : B

Database replication is a feature that allows you to create a copy of a database in another account, region, or cloud platform for disaster recovery or business continuity purposes. However, not all database objects can be replicated. External tables are one of the exceptions, as they reference data files stored in an external stage that is not part of Snowflake. Therefore, to replicate a database that contains external tables, you need to move the external tables to a separate database that is not replicated, and then replicate the primary database that contains the other objects. This way, you can avoid replication errors and ensure consistency between the primary and secondary databases. The other options are incorrect because they either do not address the issue of external tables, or they use an alternative method that is not supported by Snowflake. You cannot create a clone of the primary database and then replicate it, as replication only works on the original database, not on its clones. You also cannot share the primary database with another account, as sharing is a different feature that does not create a copy of the database, but rather grants access to the shared objects. Finally, you do not need to ensure that the replicated database is in the same region as the external tables, as external tables can access data files stored in any region or cloud platform, as long as the stage URL is valid and accessible.Reference:

[Replication and Failover/Failback]1

[Introduction to External Tables]2

[Working with External Tables]3

[Replication : How to migrate an account from One Cloud Platform or Region to another in Snowflake]4

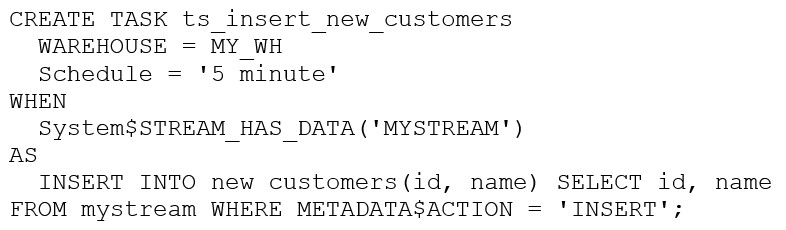

The following DDL command was used to create a task based on a stream:

Assuming MY_WH is set to auto_suspend -- 60 and used exclusively for this task, which statement is true?

Answer : B

The warehouse MY_WH will only be active when there are results in the stream. This is because the task is created based on a stream, which means that the task will only be executed when there are new data in the stream. Additionally, the warehouse is set to auto_suspend - 60, which means that the warehouse will automatically suspend after 60 seconds of inactivity. Therefore, the warehouse will only be active when there are results in the stream.Reference:

[CREATE TASK | Snowflake Documentation]

[Using Streams and Tasks | Snowflake Documentation]

[CREATE WAREHOUSE | Snowflake Documentation]

Which of the below commands will use warehouse credits?

Answer : B, C, D

Among the commands listed in the question, the following ones will use warehouse credits:

The command that will not use warehouse credits is:

A Developer is having a performance issue with a Snowflake query. The query receives up to 10 different values for one parameter and then performs an aggregation over the majority of a fact table. It then

joins against a smaller dimension table. This parameter value is selected by the different query users when they execute it during business hours. Both the fact and dimension tables are loaded with new data in an overnight import process.

On a Small or Medium-sized virtual warehouse, the query performs slowly. Performance is acceptable on a size Large or bigger warehouse. However, there is no budget to increase costs. The Developer

needs a recommendation that does not increase compute costs to run this query.

What should the Architect recommend?

Answer : C

Enabling the search optimization service on the table can improve the performance of queries that have selective filtering criteria, which seems to be the case here. This service optimizes the execution of queries by creating a persistent data structure called a search access path, which allows some micro-partitions to be skipped during the scanning process. This can significantly speed up query performance without increasing compute costs1.

Reference

* Snowflake Documentation on Search Optimization Service1.

What is a key consideration when setting up search optimization service for a table?

Answer : A

A. The Search Optimization Service is designed to accelerate the performance of queries that use filters on large tables. One of the key considerations for its effectiveness is using it with tables where the columns used in the filter conditions have a high number of distinct values, typically in the hundreds of thousands or more. This is because the service creates a map-reduce-like index on the column to speed up queries that use point lookups or range scans on that column. The more unique values there are, the more effective the index is at narrowing down the search space. Reference: Snowflake documentation and best practices on the Search Optimization Service, which would be covered under the SnowPro Advanced: Architect certification materials.

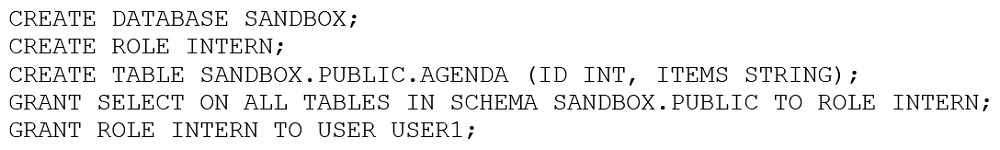

An Architect entered the following commands in sequence:

USER1 cannot find the table.

Which of the following commands does the Architect need to run for USER1 to find the tables using the Principle of Least Privilege? (Choose two.)

Answer : B, C

According to the Principle of Least Privilege, the Architect should grant the minimum privileges necessary for the USER1 to find the tables in the SANDBOX database.

The USER1 needs to have USAGE privilege on the SANDBOX database and the SANDBOX.PUBLIC schema to be able to access the tables in the PUBLIC schema. Therefore, the commands B and C are the correct ones to run.

The command A is not correct because the PUBLIC role is automatically granted to every user and role in the account, and it does not have any privileges on the SANDBOX database by default.

The command D is not correct because it would transfer the ownership of the SANDBOX database from the Architect to the USER1, which is not necessary and violates the Principle of Least Privilege.

The command E is not correct because it would grant all the possible privileges on the SANDBOX database to the USER1, which is also not necessary and violates the Principle of Least Privilege.